Bayesian Programming and Learning for Multi-Player

Video Games

Application to RTS AI

Ph.D thesis

Gabriel Synnaeve

���

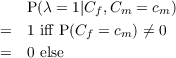

Abstract

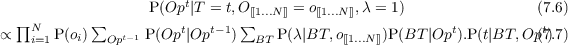

This thesis explores the use of Bayesian models in multi-player video

games AI, particularly real-time strategy (RTS) games AI. Video games are

in-between real world robotics and total simulations, as other players are not

simulated, nor do we have control over the simulation. RTS games require having

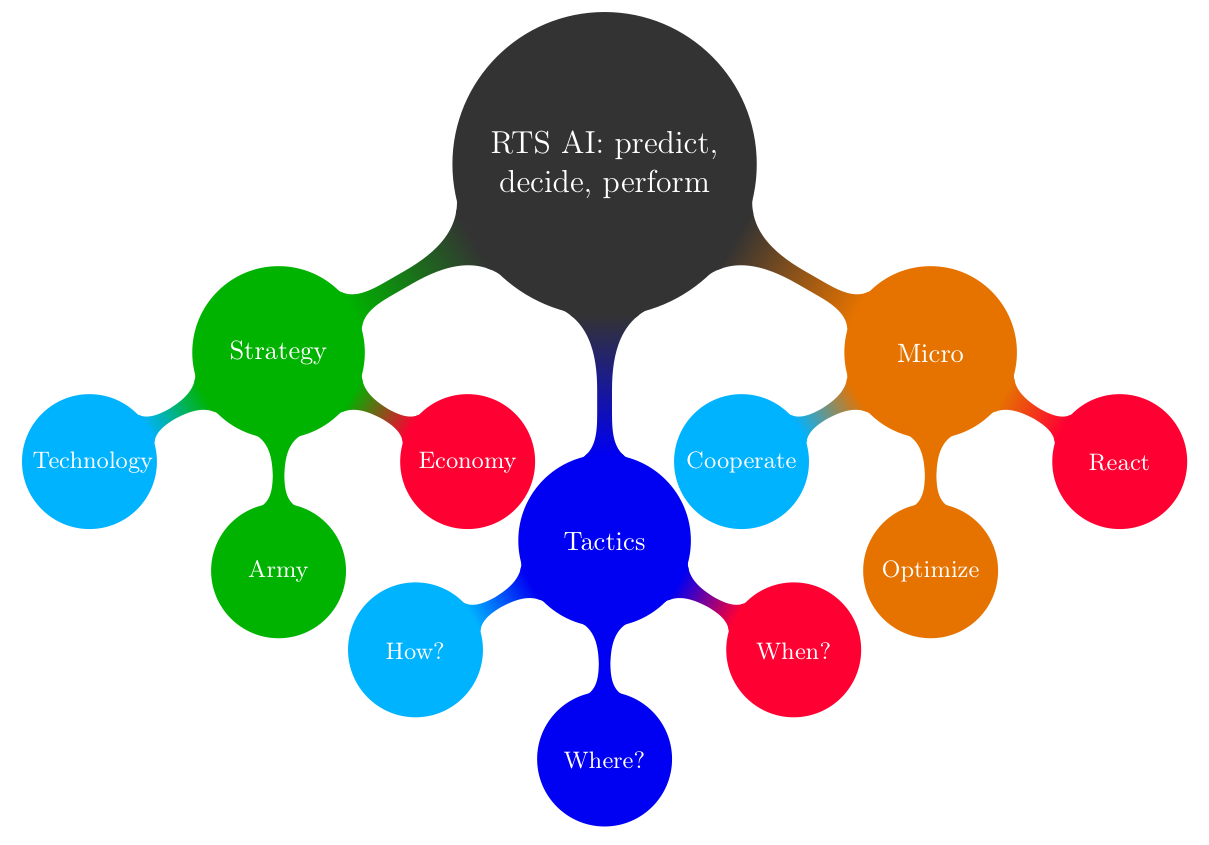

strategic (technological, economical), tactical (spatial, temporal) and reactive (units

control) actions and decisions on the go. We used Bayesian modeling as an

alternative to (boolean valued) logic, able to cope with incompleteness of

information and (thus) uncertainty. Indeed, incomplete specification of the possible

behaviors in scripting, or incomplete specification of the possible states in

planning/search raise the need to deal with uncertainty. Machine learning

helps reducing the complexity of fully specifying such models. We show that

Bayesian programming can integrate all kinds of sources of uncertainty (hidden

state, intention, stochasticity), through the realization of a fully robotic

StarCraft player. Probability distributions are a mean to convey the full

extent of the information we have and can represent by turns: constraints,

partial knowledge, state space estimation and incompleteness in the model

itself.

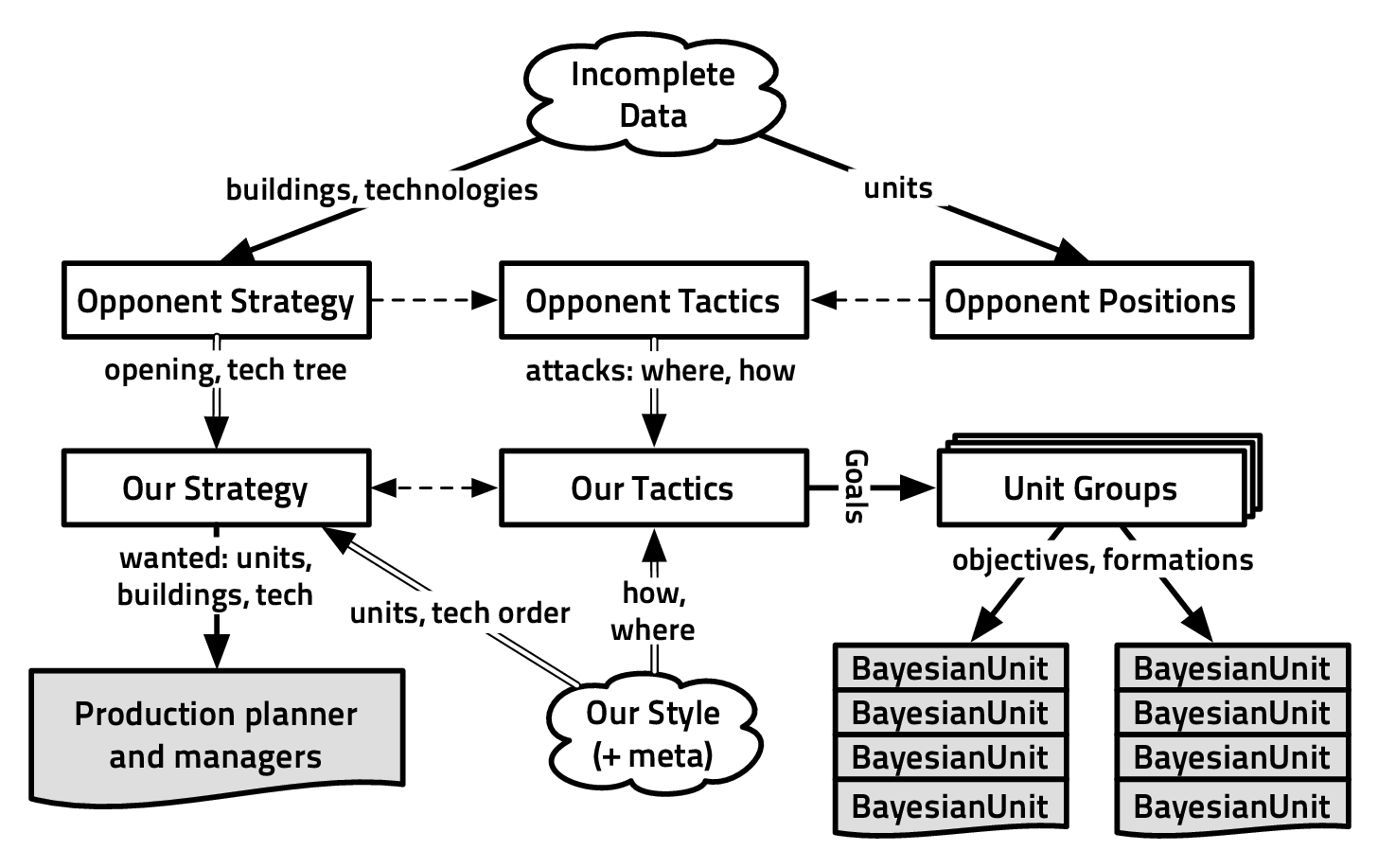

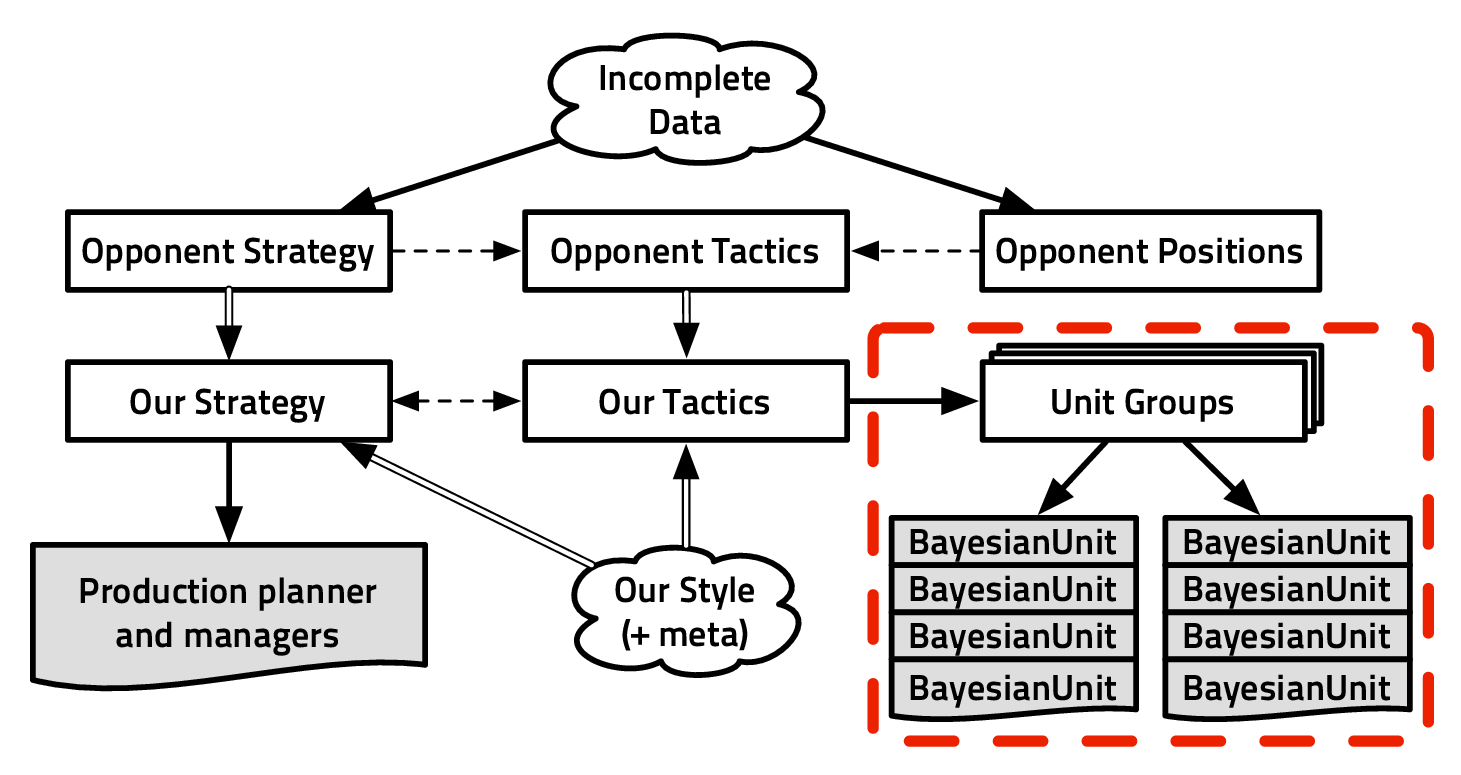

In the first part of this thesis, we review the current solutions to problems raised by

multi-player game AI, by outlining the types of computational and cognitive

complexities in the main gameplay types. From here, we sum up the transversal

categories of problems, introducing how Bayesian modeling can deal with all of them.

We then explain how to build a Bayesian program from domain knowledge and

observations through a toy role-playing game example. In the second part of the

thesis, we detail our application of this approach to RTS AI, and the models that we

built up. For reactive behavior (micro-management), we present a real-time

multi-agent decentralized controller inspired from sensory motor fusion. We

then show how to perform strategic and tactical adaptation to a dynamic

opponent through opponent modeling and machine learning (both supervised

and unsupervised) from highly skilled players’ traces. These probabilistic

player-based models can be applied both to the opponent for prediction, or to

ourselves for decision-making, through different inputs. Finally, we explain our

StarCraft robotic player architecture and precise some technical implementation

details.

Beyond models and their implementations, our contributions are threefolds:

machine learning based plan recognition/opponent modeling by using the structure of

the domain knowledge, multi-scale decision-making under uncertainty, and

integration of Bayesian models with a real-time control program.

Contents

Notations

Symbols

| ← | assignment of value to the left hand operand |

| ~ | the right operand is the distribution of the left operand (random variable) |

| ∝ | proportionality |

| ≈ | approximation |

| # | cardinal of a space or dimension of a variable |

| ∩ | intersection |

| ∪ | union |

| ∧ | and |

| ∨ | or |

| MT | M transposed |

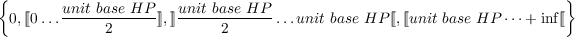

| ⟦ ⟧ | integers interval |

| |

Variables

| X and V ariable | random variables (or logical propositions) |

| x and value | values |

#s =  | cardinal of the set s |

#X =  | shortcut for “cardinal of the set of the values of X” |

| X1:n | the set of n random variables X1…Xn |

| {x ∈ Ω|Q(x)} | the set of elements from Ω which verify Q |

| |

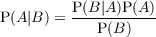

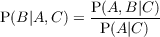

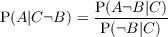

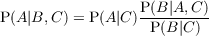

Probabilities

| P(X) = Distribution | is equivalent to X ~ Distribution |

| P(x) = P(X = x) = P([X = x]) | Probability (distribution) that X takes the value x |

| P(X,Y ) = P(X ∧ Y ) | Probability (distribution) of the conjunction of X and Y |

| P(X|Y ) | Probability (distribution) of X knowing Y |

| |

Conventions

Chapter 1

Introduction

Every game of skill is susceptible of being played by an automaton.

|

1.1 Context

1.1.1 Motivations

There is more to playing than entertainment, particularly, playing has been found to

be a basis for motor and logical learning. Real-time video games require

skill and deep reasoning, attributes respectively shared by music playing

and by board games. On many aspects, high-level real-time strategy (RTS)

players can be compared to piano players, who would write their partition

depending on how they want to play a Chess match, simultaneously to their

opponent.

Research on video games rests in between research on real-world robotics and

research on simulations or theoretical games. Indeed artificial intelligences (AI) evolve

in a simulated world that is also populated with human-controlled agents and other

AI agents on which we have no control. Moreover, the state space (set of states

that are reachable by the players) is much bigger than in board games. For

instance, the branching factor* in StarCraft is greater than 1.106, compared to

approximatively 35 in Chess and 360 in Go. Research on video games thus

constitutes a great opportunity to bridge the gap between real-world robotics and

simulations.

Another strong motivation is that there are plenty of highly skilled human video

game players, which provides inspiration and incentives to measure our artificial

intelligences against them. For RTS* games, there are professional players, whose

games are recorded. This provides datasets consisting in thousands human-hours of

play, by humans who beat any existing AI, which enables machine learning. Clearly,

there is something missing to classical AI approaches to be able to handle video

games as efficiently as humans do. I believe that RTS AI is where Chess AI was in

the early 70s: we have RTS AI world competitions but even the best entries cannot

win against skilled human players.

Complexity, real-time constraints and uncertainty are ubiquitous in video games.

Therefore video games AI research is yielding new approaches to a wide range of

problems. For instance in RTS* games: pathfinding, multiple agents coordination,

collaboration, prediction, planning and (multi-scale) reasoning under uncertainty.

The RTS framework is particularly interesting because it encompasses most of these

problems: the solutions have to deal with many objects, imperfect information,

strategic reasoning and low-level actions while running in real-time on desktop

hardware.

1.1.2 Approach

Games are beautiful mathematical problems with adversarial, concurrent and

sometimes even social dimensions, which have been formalized and studied through

game theory. On the other hand, the space complexity of video games make them

intractable problems with only theoretical tools. Also, the real-time nature of the

video games that we studied asks for efficient solutions. Finally, several video games

incorporate different forms of uncertainty, being it from partial observations or

stochasticity due to the rules. Under all these constraints, taking real-time

decisions under uncertainties and in combinatorics spaces, we have to provide a

way to program robotic video games players, whose level matches amateur

players.

We have chosen to embrace uncertainty and produce simple models which can

deal with the video games’ state spaces while running in real-time on commodity

hardware: All models are wrong; some models are useful. (attributed to Georges

Box). If our models are necessarily wrong, we have to consider that they are

approximations, and work with probabilities distributions. The other reasons to do so

confirm us in our choice:

- Partial information forces us to be able to deal with state uncertainty.

- Not only we cannot be sure about our model relevance, but how can we

assume “optimal” play from the opponent in a so complex game and so

huge state space?

The unified framework to reason under uncertainty that we used is the one of plausible

reasoning and Bayesian modeling.

As we are able to collect data about high skilled human players or produce data

through experience, we can learn the parameters of such Bayesian models. This

modeling approach unifies all the possible sources of uncertainties, learning, along

with prediction and decision-making in a consistent framework.

1.2 Contributions

We produced tractable models addressing different levels of reasoning, whose

difficulty of specification was reduced by taking advantage of machine learning

techniques, and implemented a full StarCraft AI.

- Models breaking the complexity of inference and decision in games:

- We showed that multi-agent behaviors can be authored through

inverse programming (specifying independently sensor distribution

knowing the actions), as an extension of [Hy, 2007]. We used

decentralized control (for computational efficiency) by considering

agents as sensory motor-robots: the incompleteness of not

communicating with each others is transformed into uncertainty.

- We took advantage of the hierarchy of decision-making in games

by presenting and exploiting abstractions for RTS games (strategy

& tactics) above units control. Producing abstractions, being it

through heuristics or less supervised methods, produces “bias” and

uncertainty.

- We took advantage of the sequencing and temporal continuity in

games. When taking a decision, previous observations, prediction

and decisions are compressed in distributions on variables under the

Markovian assumption.

- Machine learning on models integrating prediction and decision-making:

- We produced some of our abstractions through semi-supervised or

unsupervised learning (clustering) from datasets.

- We identified the parameters of our models from human-played

games, the same way that our models can learn from their opponents

actions / past experiences.

- An implementation of a competitive StarCraft AI able to play full games with

decent results in worldwide competitions.

Finally, video games is a billion dollars industry ($65 billion worldwide in 2011).

With this thesis, we also hope to deliver a guide for industry practitioners who would

like to have new tools for solving the ever increasing state space complexity of

(multi-scale) game AI, and produce challenging and fun to play against

AI.

1.3 Reading map

First, even though I tried to keep jargon to a minimum, when there is a precise word

for something, I tend to use it. For AI researchers, there is a lot of video games’

jargon; for game designers and programmers, there is a lot of AI jargon. I have put

everything in a comprehensive glossary.

Chapter 2 gives a basic culture about (pragmatic) game AI. The first part

explains minimax, alpha-beta and Monte-Carlo tree search by glossing over

Tic-tac-toe, Chess and Go respectively. The second part is about video games’ AI

challenges. The reader novice to AI who wants a deep introduction on artificial

intelligence can turn to the leading textbook [Russell and Norvig, 2010]. More

advanced knowledge about some specificities of game AI can be acquired by reading

the Quake III (by iD Software) source code: it is very clear and documented modern

C, and it stood the test of time in addition to being the canonical fast first person

shooter. Finally, there is no substitute for the reader novice to games to play them in

order to grasp them.

We first notice that all game AI challenge can be addressed with uncertain

reasoning, and present in chapter 3 the basics of our Bayesian modeling formalism.

As we present probabilistic modeling as an extension of logic, it may be an easy entry

to building probabilistic models for novice readers. It is not sufficient to give a strong

background on Bayesian modeling however, but there are multiple good books on the

subject. We advise the reader who want a strong intuition of Bayesian modeling to

read the seminal work by Jaynes [2003], and we found the chapter IV of the

(free) book of MacKay [2003] to be an excellent and efficient introduction

to Bayesian inference. Finally, a comprehensive review of the spectrum of

applications of Bayesian programming (until 2008) is provided by [Bessière

et al., 2008].

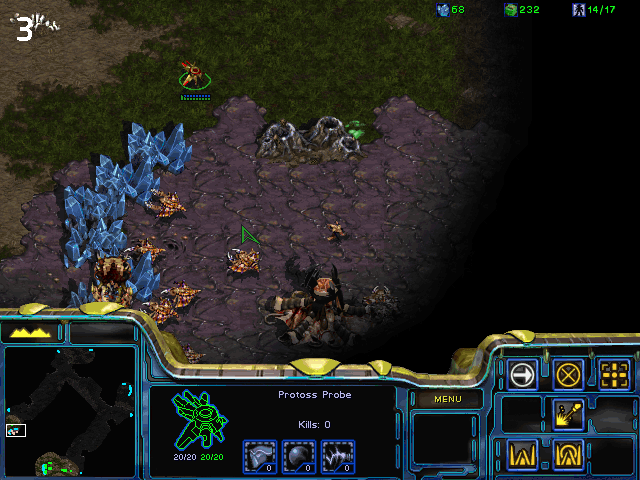

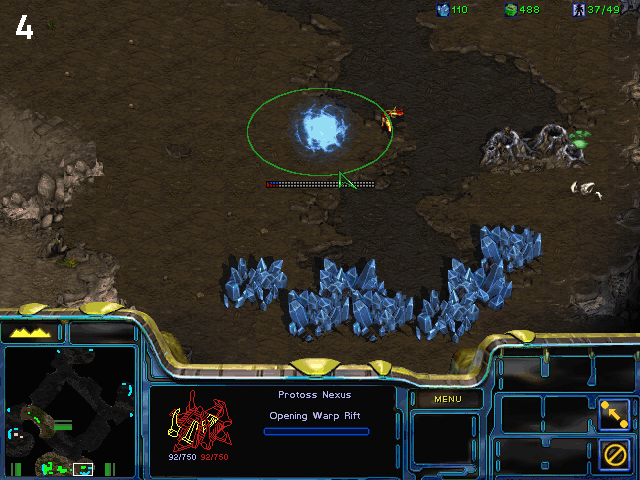

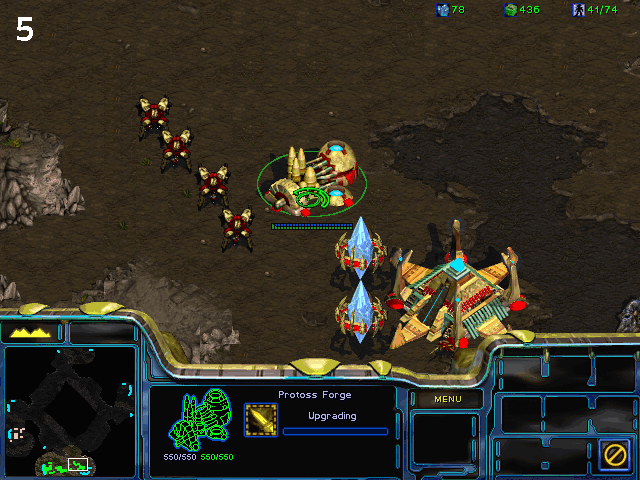

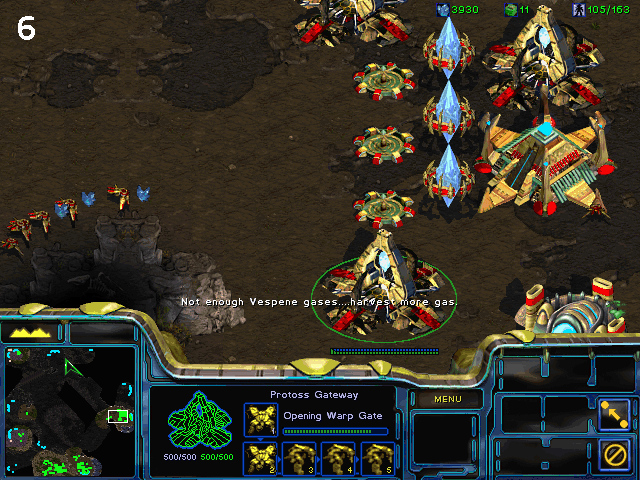

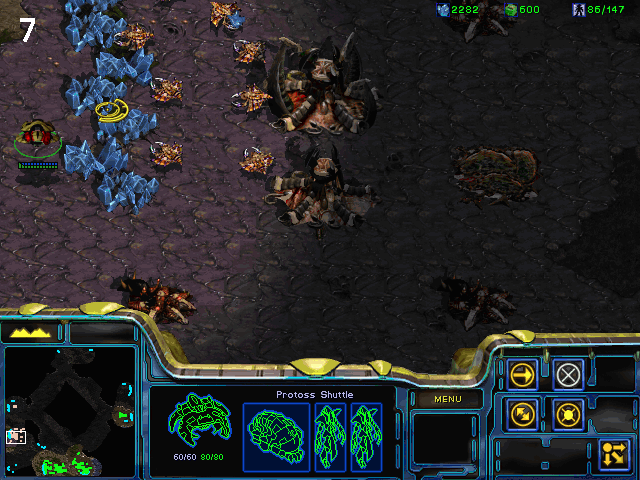

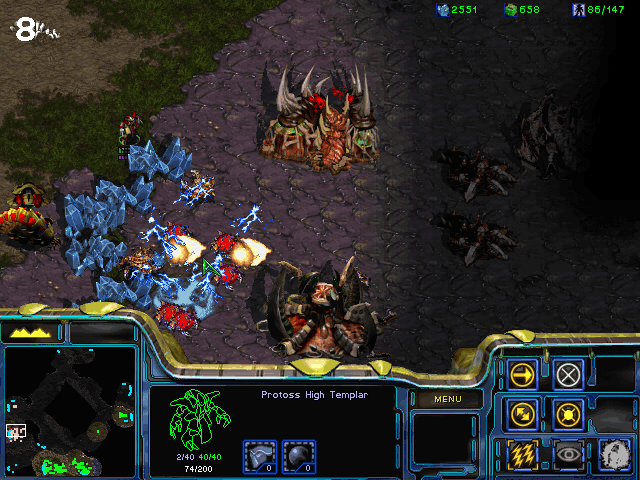

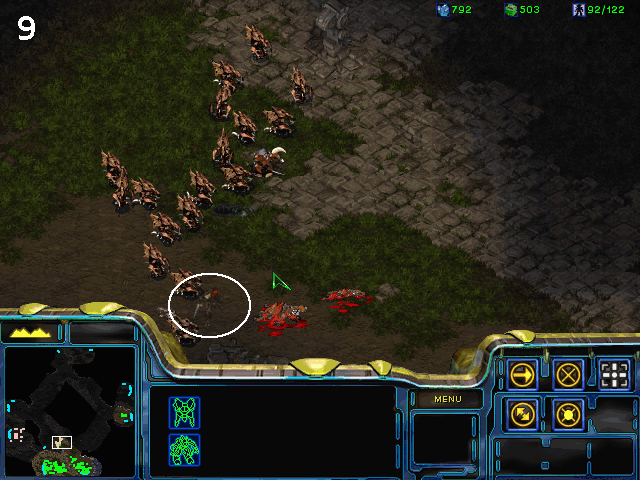

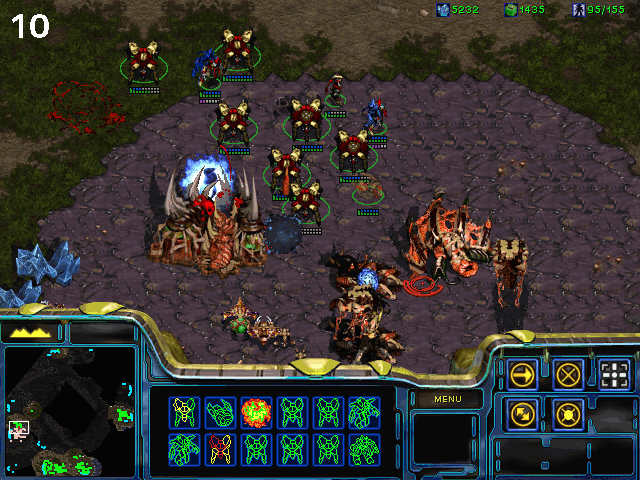

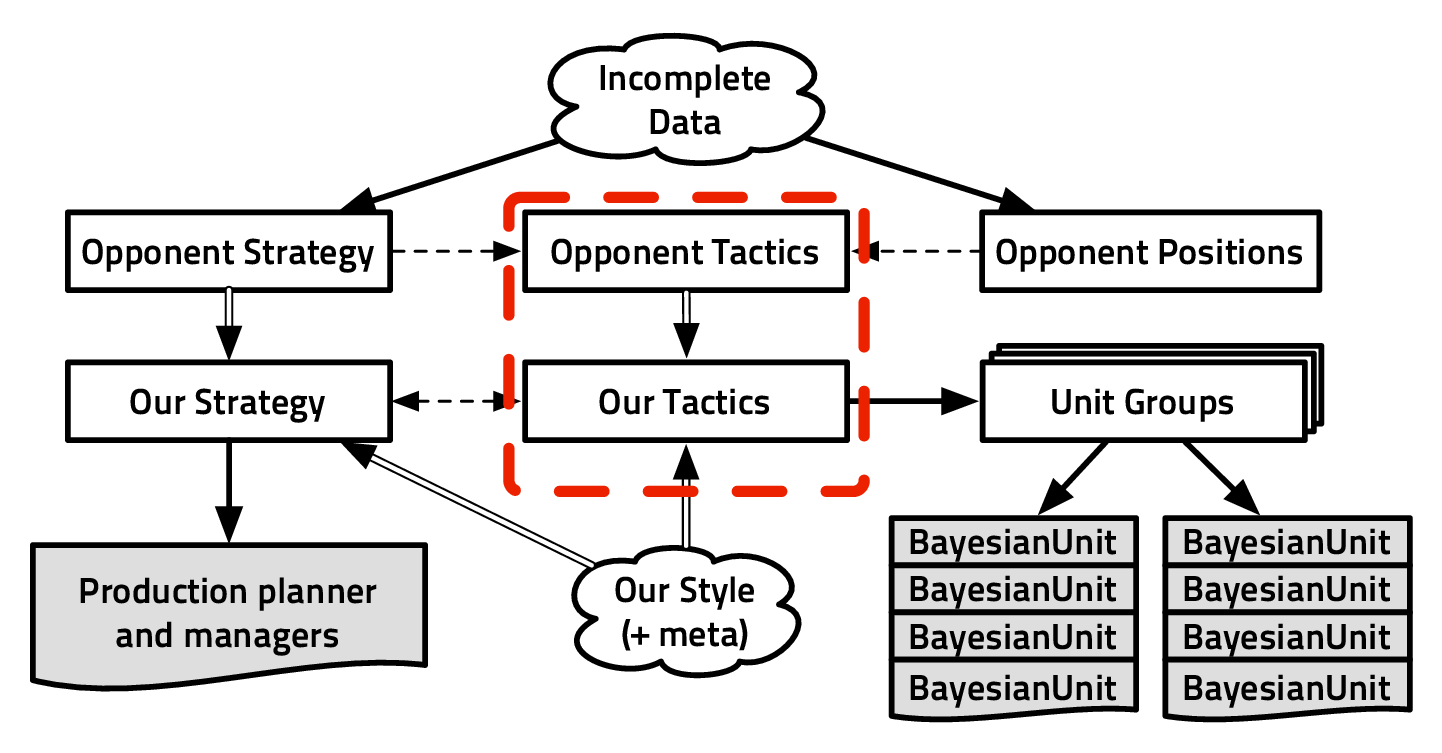

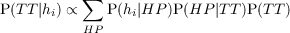

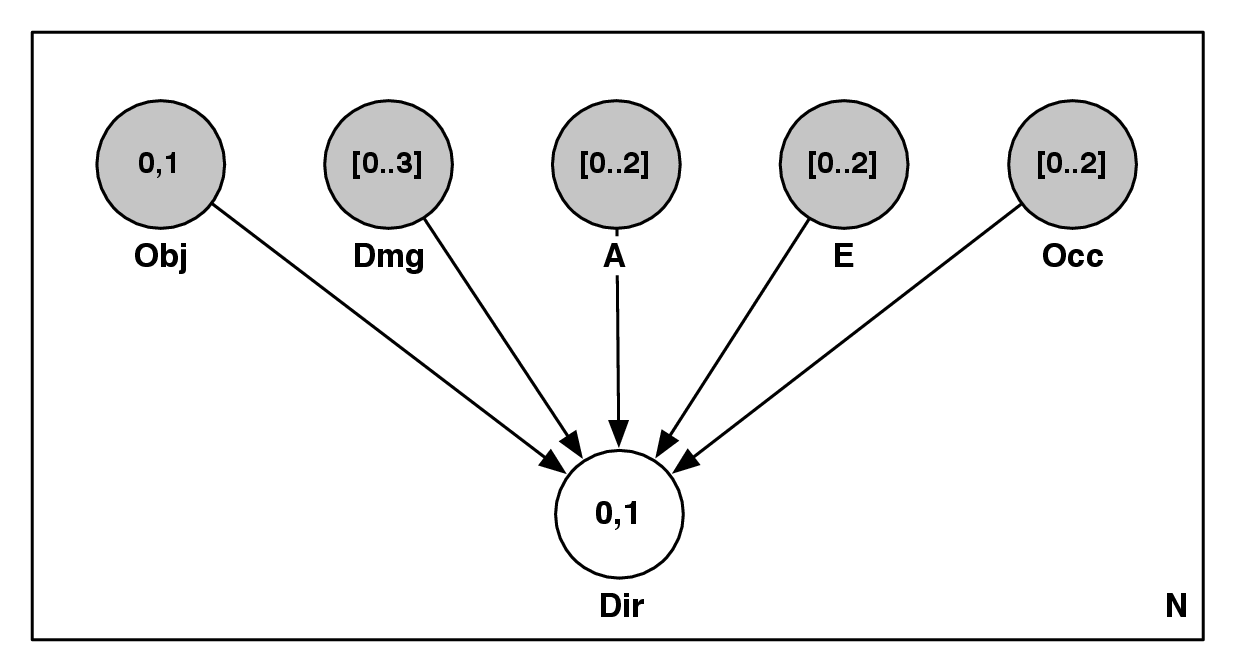

Chapter 4 explains the challenges of playing a real-time strategy game through

the example of StarCraft: Broodwar. It then explains our decomposition of the

problem in the hierarchical abstractions that we have studied.

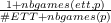

Chapter 5 presents our solution to the real-time multi-agent cooperative and

adversarial problem that is micro-management. We had a decentralized reactive

behavior approach providing a framework which can be used in other games than

StarCraft. We proved that it is easy to change the behaviors by implementing several

modes with minimal code changes.

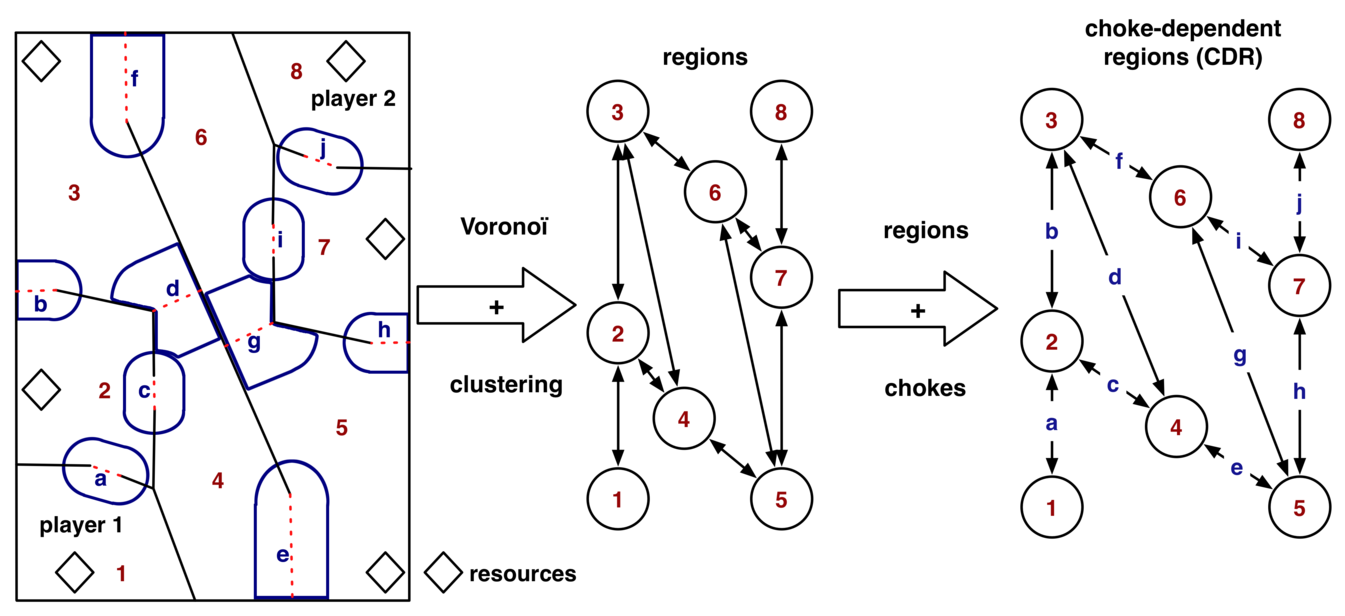

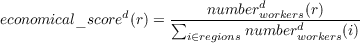

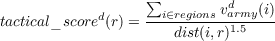

Chapter 6 deals with the tactical abstraction for partially observable games. Our

approach was to abstract low-level observations up to the tactical reasoning level

with simple heuristics, and have a Bayesian model make all the inferences at this

tactical abstracted level. The key to producing valuable tactical predictions

and decisions is to train the model on real game data passed through the

heuristics.

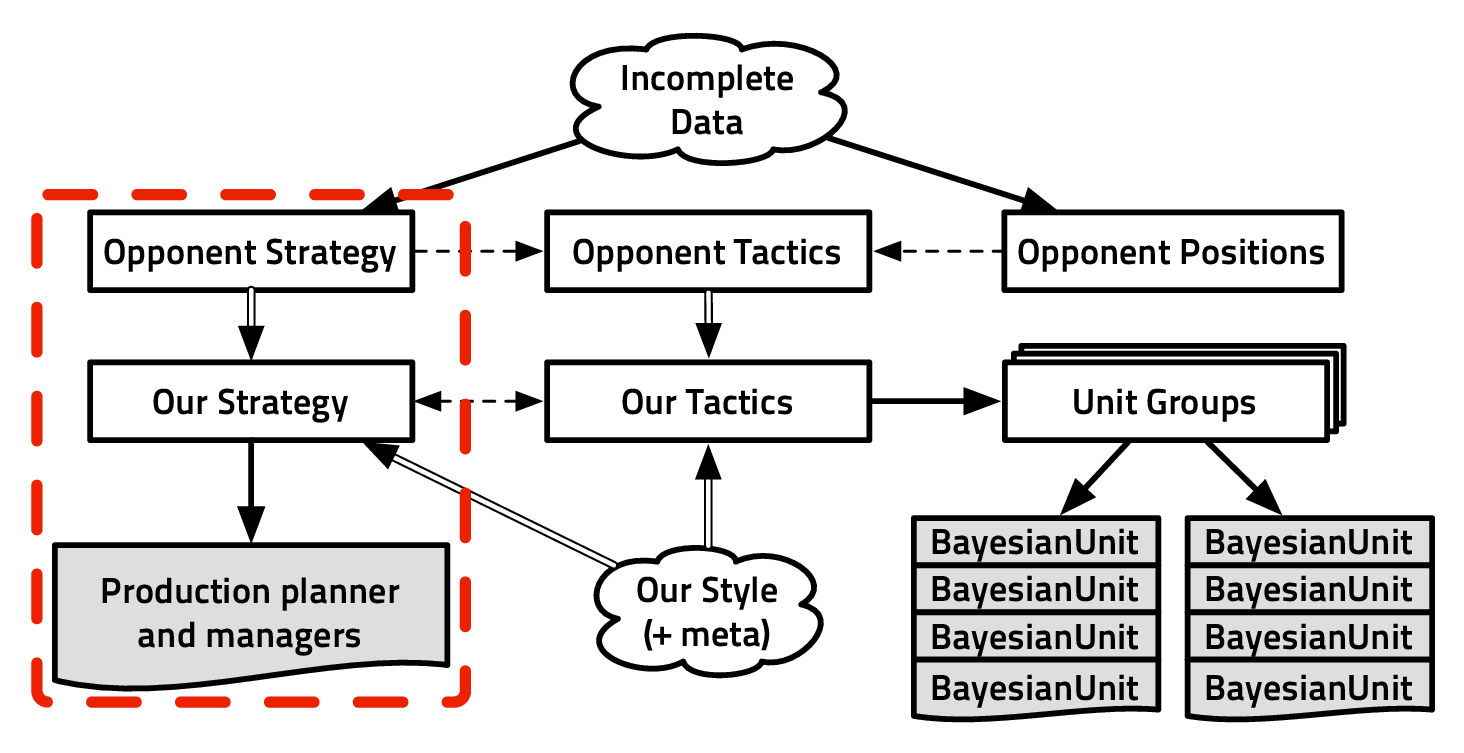

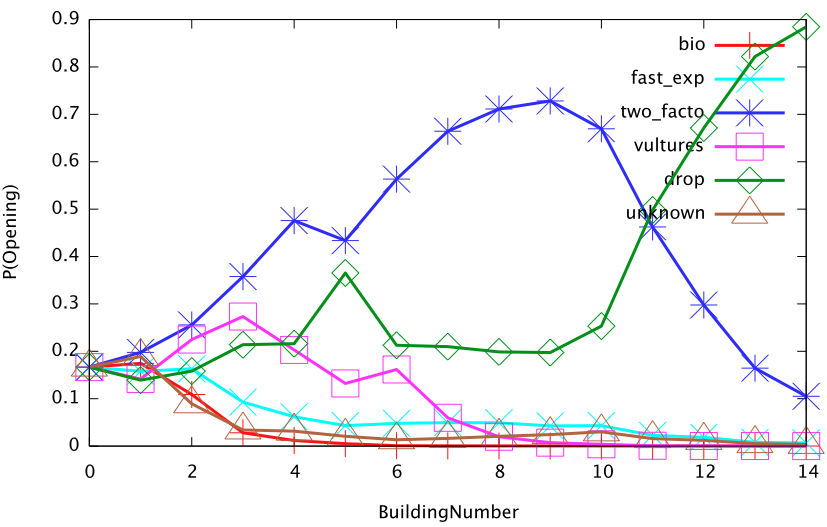

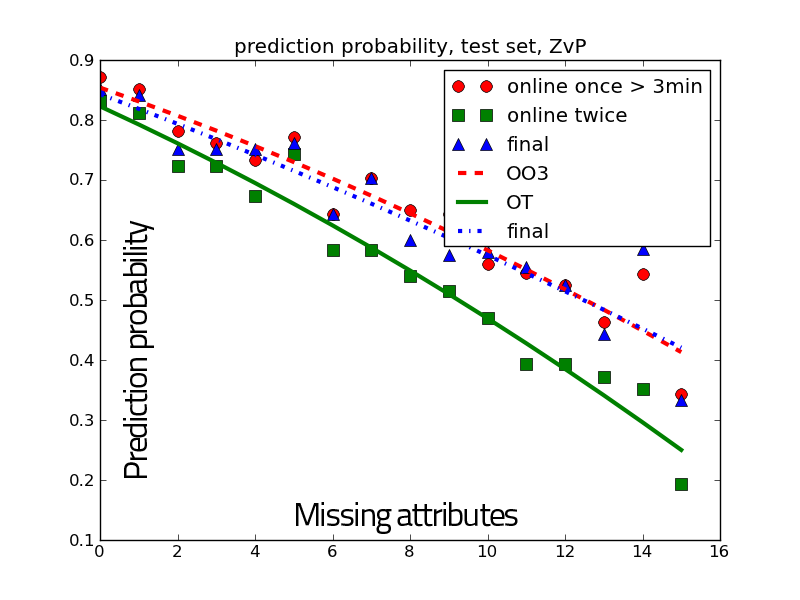

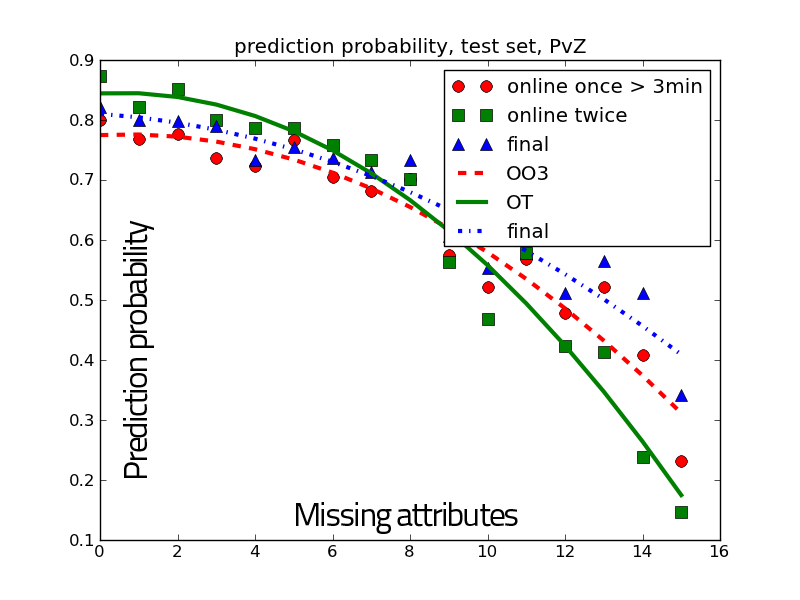

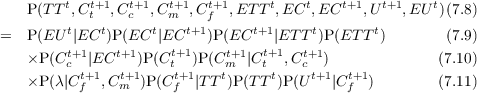

Chapter 7 shows our decompositions of strategy into specific prediction and

adaptation (under uncertainty) tasks. Our approach was to reduce the complexity of

strategies by using the structure of the game rules (technology trees) of expert

players vocable (openings) decisions (unit types combinations/proportions). From

partial observations, the probability distributions on the opponent’s strategy are

reconstructed, which allows for adaptation and decision-making.

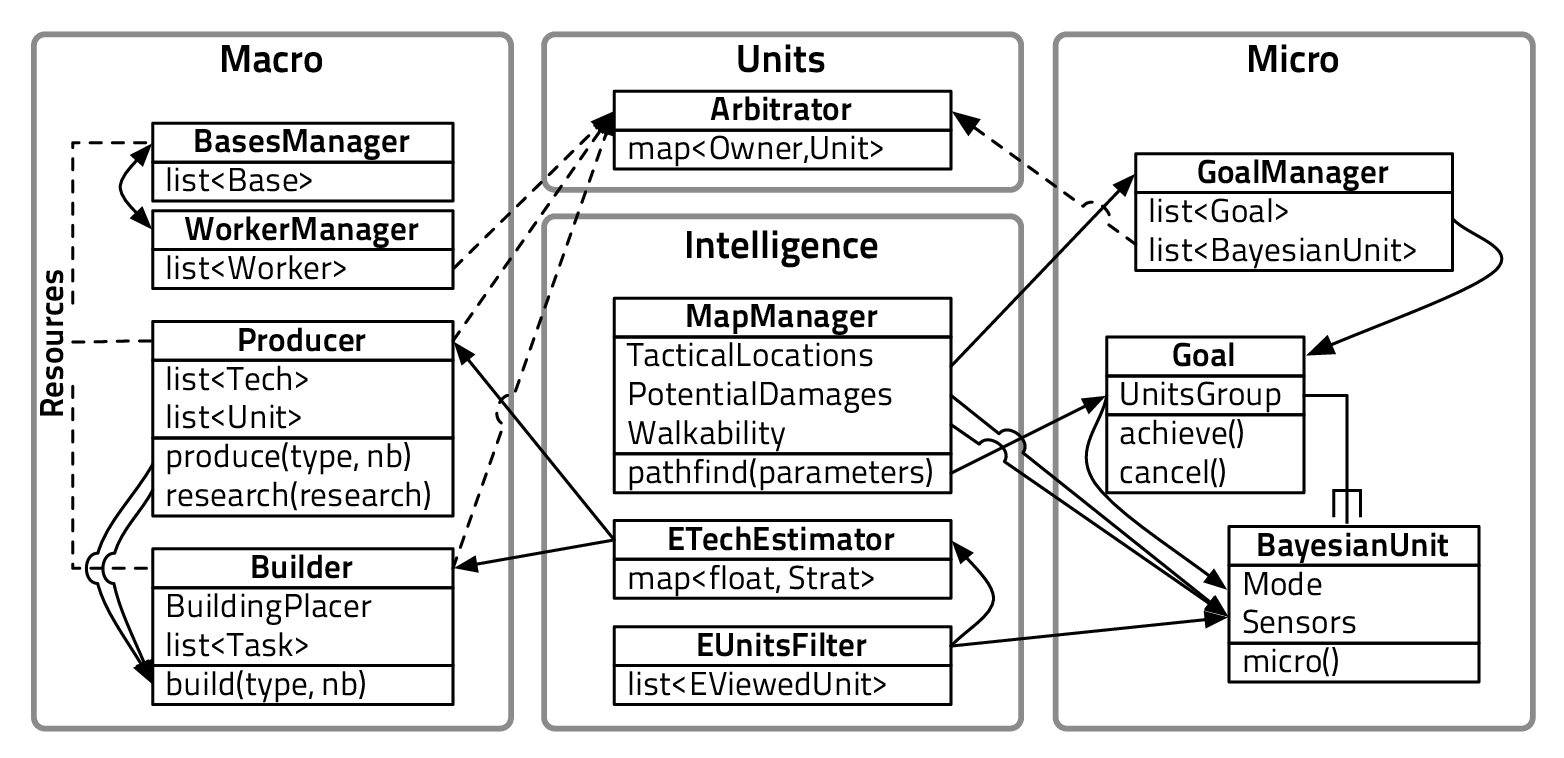

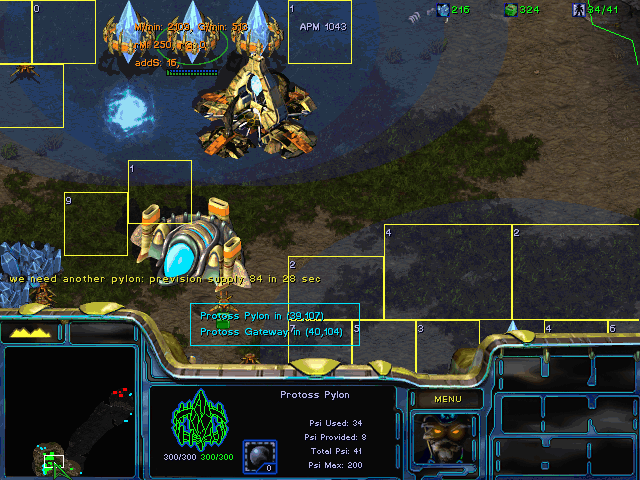

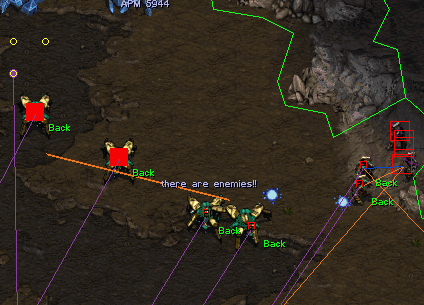

Chapter 8 describes briefly the software architecture of our robotic player (bot). It

makes the link between the Bayesian models presented before and their connection

with the bot’s program. We also comment some of the bot’s debug output to show

how a game played by our bot unfolds.

We conclude by putting the contributions back in their contexts, and opening up

several perspectives for future work in the RTS AI domain.

Chapter 2

Game AI

It is not that the games and mathematical problems are chosen because

they are clear and simple; rather it is that they give us, for the smallest

initial structures, the greatest complexity, so that one can engage some

really formidable situations after a relatively minimal diversion into

programming.

Marvin Minsky (Semantic Information Processing, 1968)

|

IS the primary goal of game AI to win the game? “Game AI” is simultaneously a

research topic and an industry standard practice, for which the main metric is the

fun the players are having. Its uses range from character animation, to behavior

modeling, strategic play, and a true gameplay* component. In this chapter, we will

give our educated guess about the goals of game AI, and review what exists for a

broad category of games: abstract strategy games, partial information and/or

stochastic games, different genres of computer games. Let us then focus on gameplay

(from a player point of view) characteristics of these games so that we can enumerate

game AI needs.

2.1 Goals of game AI

2.1.1 NPC

Non-playing characters (NPC*), also called “mobs”, represent a massive volume of

game AI, as a lot of multi-player games have NPC. They really represents players

that are not conceived to be played by humans, by opposition to “bots”, which

corresponds to human-playable characters controlled by an AI. NPC are

an important part of ever more immersive single player adventures (The

Elder Scrolls V: Skyrim), of cooperative gameplays* (World of Warcraft, Left

4 Dead), or as helpers or trainers (“pets”, strategy games). NPC can be

a core part of the gameplay as in Creatures or Black and White, or dull

“quest giving poles” as in a lot of role-playing games. They are of interest for

the game industry, but also for robotics, to study human cognition and for

artificial intelligence in the large. So, the first goal of game AI is perhaps

just to make the artificial world seem alive: a paint is not much fun to play

in.

2.1.2 Win

During the last decade, the video game industry has seen the emergence of “e-sport”.

It is the professionalizing of specific competitive games at the higher levels, as in

sports: with spectators, leagues, sponsors, fans and broadcasts. A list of major

electronic sport games includes (but is not limited to): StarCraft: Brood War,

Counter-Strike, Quake III, Warcraft III, Halo, StarCraft II. The first game to have

had pro-gamers* was StarCraft: Brood War*, in Korea, with top players

earning more than Korean top soccer players. Top players earn more than

$400,000 a year but the professional average is below, around $50-60,000 a

year [Contracts, 2007], against the average South Korean salary at $16,300

in 2010. Currently, Brood War is being slowly phased out to StarCraft II.

There are TV channels broadcasting Brood War (OnGameNet, previously

also MBC Game) or StarCraft II (GOM TV, streaming) and for which it

constitutes a major chunk of the air time. “E-sport” is important to the

subject of game AI because it ensures competitiveness of the human players. It

is less challenging to write a competitive AI for game played by few and

without competitions than to write an AI for Chess, Go or StarCraft. E-sport,

through the distribution of “replays*” also ensures a constant and heavy flow

of human player data to mine and learn from. Finally, cognitive science

researchers (like the Simon Fraser University Cognitive Science Lab) study the

cognitive aspects (attention, learning) of high level RTS playing [Simon Fraser

University].

Good human players, through their ability to learn and adapt, and through

high-level strategic reasoning, are still undefeated. Single players are often frustrated

by the NPC behaviors in non-linear (not fully scripted) games. Nowadays, video

games’ AI can be used as part of the gameplay as a challenge to the player. This is

not the case in most of the games though, in decreasing order of resolution of the

problem :

fast FPS* (first person shooters), team FPS, RPG* (role playing games),

MMORPG* (Massively Multi-player Online RPG), RTS* (Real-Time Strategy).

These games in which artificial intelligences do not beat top human players on equal

footing requires increasingly more cheats to even be a challenge (not for long as

they mostly do not adapt). AI cheats encompass (but are not limited to):

- RPG NPC often have at least 10 times more hit points (health points)

than their human counterparts in equal numbers,

- FPS bots can see through walls and use perfect aiming,

- RTS bots see through the “fog of war*” and have free additional resources.

How do we build game robotic players (“bots”, AI, NPC) which can provide some

challenge, or be helpful without being frustrating, while staying fun?

2.1.3 Fun

The main purpose of gaming is entertainment. Of course, there are game genres

like serious gaming, or the “gamification*” of learning, but the majority of

people playing games are having fun. Cheating AI are not fun, and so the

replayability* of single player games is very low. The vast majority of games

which are still played after the single player mode are multi-player games,

because humans are still the most fun partners to play with. So how do

we get game AI to be fun to play with? The answer seems to be 3-fold:

- For competitive and PvP* (players versus players) games: improve game

AI so that it can play well on equal footing with humans,

- for cooperative and PvE* (players vs environment) games: optimize the

AI for fun, “epic wins”: the empowerment of playing your best and just

barely winning,

- give the AI all the tools to adapt the game to the players: AI directors*

(as in Left 4 Dead* and Dark Spore*), procedural content generation (e.g.

automatic personalized Mario [Shaker et al., 2010]).

In all cases, a good AI should be able to learn from the players’ actions, recognize their

behavior to deal with it in the most entertaining way. Examples for a few mainstream

games: World of Warcraft instances or StarCraft II missions could be less predictable

(less scripted) and always “just hard enough”, Battlefield 3 or Call of Duty opponents

could have a longer life expectancy (5 seconds in some cases), Skyrim’s follower

NPC* could avoid blocking the player in doors, or going in front when they cast

fireballs.

2.1.4 Programming

How do game developers want to deal with game AI programming? We have to

understand the needs of industry game AI programmers:

- computational efficiency: most games are real-time systems, 3D graphics

are computationally intensive, as a result the AI CPU budget is low,

- game designers often want to remain in control of the behaviors, so game

AI programmers have to provide authoring tools,

- AI code has to scale with the state spaces while being debuggable: the

complexity of navigation added to all the possible interactions with the

game world make up for an interesting “possible states coverage and

robustness” problem,

- AI behaviors have to scale with the possible game states (which are not

all predictable due to the presence of the human player),

- re-use accross games (game independant logic, at least libraries).

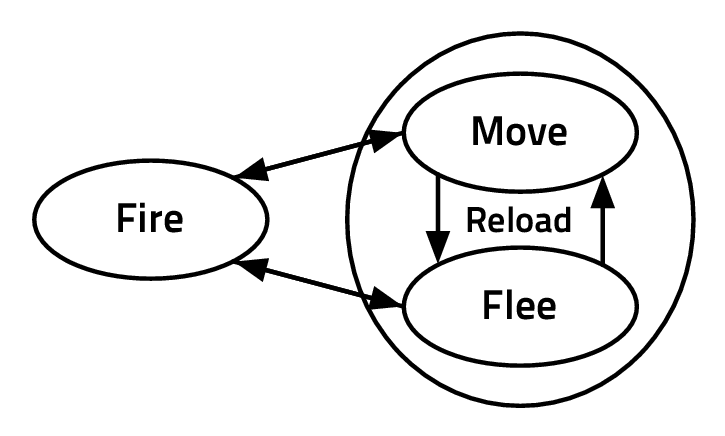

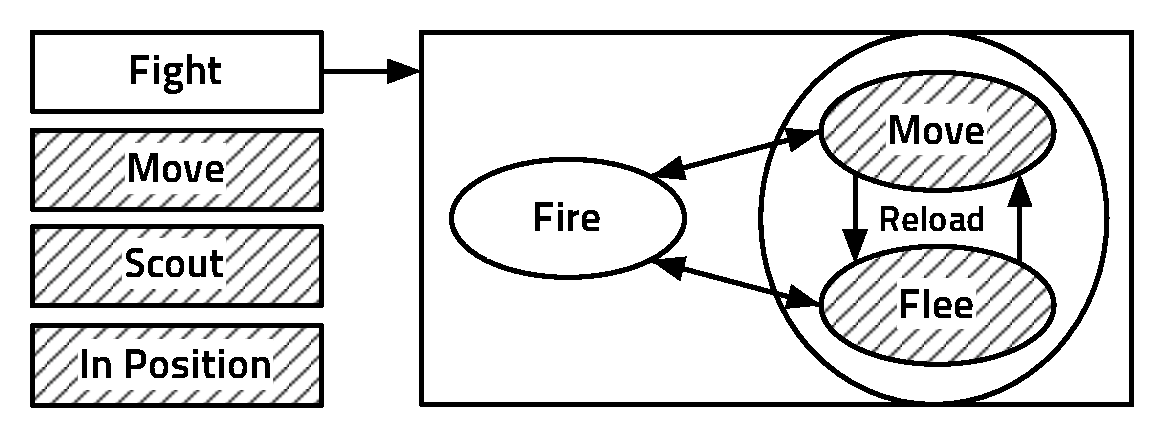

As a first approach, programmers can “hard code” the behaviors and their switches.

For some structuring of such states and transitions, they can and do use

state machines [Houlette and Fu, 2003]. This solution does not scale well

(exponential increase in the number of transitions), nor do they generate

autonomous behavior, and they can be cumbersome for game designers to

interact with. Hierarchical finite state machine (FSM)* is a partial answer to

these problems: they scale better due to the sharing of transitions between

macro-states and are more readable for game designers who can zoom-in on

macro/englobing states. They still represent way too much programming work for

complex behavior and are not more autonomous than classic FSM. Bakkes

et al. [2004] used an adaptive FSM mechanism inspired by evolutionary algorithms

to play Quake III team games. Planning (using a search heuristic in the

states space) efficiently gives autonomy to virtual characters. Planners like

hierarchical task networks (HTNs)* [Erol et al., 1994] or STRIPS [Fikes and

Nilsson, 1971] generate complex behaviors in the space of the combinations of

specified states, and the logic can be re-used accross games. The drawbacks

can be a large computational budget (for many agents and/or a complex

world), the difficulty to specify reactive behavior, and less (or harder) control

from the game designers. Behavior trees (Halo 2 [Isla, 2005], Spore) are a

popular in-between HTN* and hierarchical finite state machine (HFSM)*

technique providing scability through a tree-like hierarchy, control through

tree editing and some autonomy through a search heuristic. A transversal

technique for ease of use is to program game AI with a script (LUA, Python) or

domain specific language (DSL*). From a programming or design point of

view, it will have the drawbacks of the models it is based on. If everything

is allowed (low-level inputs and outputs directly in the DSL), everything

is possible at the cost of cumbersome programming, debugging and few

re-use.

Even with scalable

architectures like behavior trees or the autonomy that planning provides,

there are limitations (burdens on programmers/designers or CPU/GPU):

- complex worlds require either very long description of the state (in

propositional logic) or high expressivity (higher order logics) to specify

well-defined behaviors,

- the search space of possible actions increases exponentially with the

volume and complexity of interactions with the world, thus requiring ever

more efficient pruning techniques,

- once human players are in the loop (is it not the purpose of a game?),

uncertainty has to be taken into account. Previous approaches can be

“patched” to deal with uncertainty, but at what cost?

Our thesis is that we can learn complex behaviors from exploration or observations (of

human players) without the need to be explicitely programmed. Furthermore, the

game designers can stay in control by choosing which demonstration to learn from

and tuning parameters by hand if wanted. Le Hy et al. [2004] showed it in the case

of FPS AI (Unreal Tournament), with inverse programming to learn reactive

behaviors from human demonstration. We extend it to tactical and even strategic

behaviors.

2.2 Single-player games

Single player games are not the main focus of our thesis, but they present a few

interesting AI characteristics. They encompass all kinds of human cognitive abilities,

from reflexes to higher level thinking.

2.2.1 Action games

Platform games (Mario, Sonic), time attack racing games (TrackMania), solo

shoot-them-up (“schmups”, Space Invaders, DodonPachi), sports games and rhythm

games (Dance Dance Revolution, Guitar Hero) are games of reflexes, skill and

familiarity with the environment. The main components of game AI in these genres is

a quick path search heuristic, often with a dynamic environment. At the

Computational Intelligence and Games conferences series, there have been Mario

[Togelius et al., 2010], PacMan [Rohlfshagen and Lucas, 2011] and racing

competitions [Loiacono et al., 2008]: the winners often use (clever) heuristics coupled

with a search algorithm (A* for instance). As there are no human opponents,

reinforcement learning and genetic programming works well too. In action games, the

artificial player most often has a big advantage on its human counterpart as reaction

time is one of the key characteristics.

2.2.2 Puzzles

Point and click (Monkey Island, Kyrandia, Day of the Tentacle), graphic

adventure (Myst, Heavy Rain), (tile) puzzles (Minesweeper, Tetris) games

are games of logical thinking and puzzle solving. The main components

of game AI in these genres is an inference engine with sufficient domain

knowledge (an ontology). AI research is not particularly active in the genre of

puzzle games, perhaps because solving them has more to do with writing

down the ontology than with using new AI techniques. A classic well-studied

logic-based, combinatorial puzzle is Sudoku, which has been formulated as a

SAT-solving [Lynce and Ouaknine, 2006] and constraint satisfaction problem

[Simonis, 2005].

2.3 Abstract strategy games

2.3.1 Tic-tac-toe, minimax

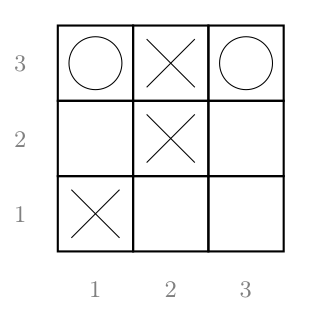

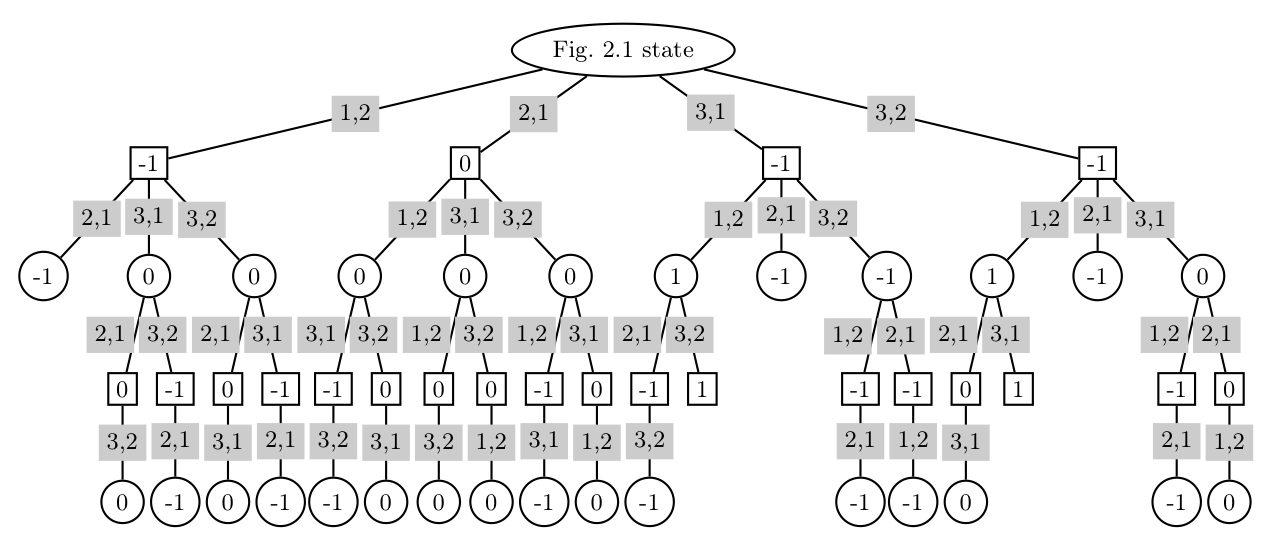

Tic-tac-toe (noughts and crosses) is a solved game*, meaning that it can be played

optimally from each possible position. How did it came to get solved? Each and every

possible positions (26,830) have been analyzed by a Minimax (or its variant

Negamax) algorithm. Minimax is an algorithm which can be used to determine the

optimal score a player can get for a move in a zero-sum game*. The Minimax

theorem states:

Theorem. For every two-person, zero-sum game with finitely many strategies,

there exists a value V and a mixed strategy for each player, such that (a) Given

player 2’s strategy, the best payoff possible for player 1 is V, and (b) Given

player 1’s strategy, the best payoff possible for player 2 is -V.

Applying this theorem to Tic-tac-toe, we can say that winning is +1 point for the

player and losing is -1, while draw is 0. The exhaustive search algorithm which takes

this property into account is described in Algorithm 1. The result of applying this

algorithm to the Tic-tac-toe situation of Fig. 2.1 is exhaustively represented in

Fig. 2.2. For zero-sum games (as abstract strategy games discussed here), there is a

(simpler) Minimax variant called Negamax, shown in Algorithm 7 in Appendix A.

Algorithm 1: Minimax algorithm

function MINI(depth)

if depth ≤ 0 then

return -value()

end if

min ← +∞

for all possible moves do

score ← maxi(depth - 1)

if score < min then

min ← score

end if

end for

return min

end function

function MAXI(depth)

if depth ≤ 0 then

return value()

end if

max ←-∞

for all possible moves do

score ← mini(depth - 1)

if score > min then

max ← score

end if

end for

return max

end function

2.3.2 Checkers, alpha-beta

Checkers, Chess and Go are also zero sum, perfect-information*, partisan*,

deterministic strategy game. Theoretically, they all can be solved by exhaustive

Minimax. In practice though, it is often intractable: their bounded versions are at

least in PSPACE and their unbounded versions are EXPTIME-hard [Hearn and

Demaine, 2009]. We can see the complexity of Minimax as O(bd) with b the average

branching factor* of the tree (to search) and d the average length (depth) of the

game. For Checkers b ≈ 8, but taking pieces is mandatory, resulting in a

mean adjusted branching factor of ≈ 4, while the mean game length is 70

resulting in a game tree complexity of ≈ 1031 [Allis, 1994]. It is already too

large to have been solved by Minimax alone (on current hardware). From

1989 to 2007, there were artificial intelligence competitions on Checkers, all

using at least alpha-beta pruning: a technique to make efficient cuts in the

Minimax search tree while not losing optimality. The state space complexity of

Checkers is the smallest of the 3 above-mentioned games with ≈ 5.1020 legal

possible positions (conformations of pieces which can happen in games). As a

matter of fact, Checkers have been (weakly) solved, which means it was

solved for perfect play on both sides (and always ends in a draw) [Schaeffer

et al., 2007a]. Not all positions resulting from imperfect play have been

analyzed.

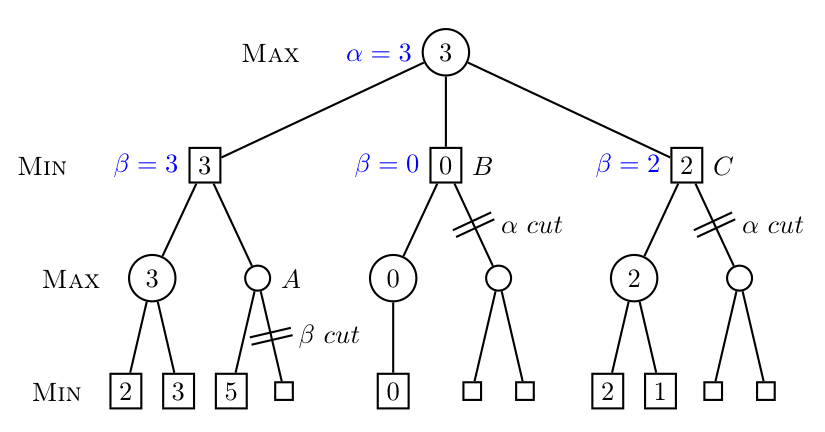

Alpha-beta pruning (see Algorithm 2) is a branch-and-bound algorithm which

can reduce Minimax search down to a O(bd∕2) = O( ) complexity if the best nodes

are searched first (O(b3d∕4) for a random ordering of nodes). α is the maximum

score that we (the maximizing player) are assured to get given what we

already evaluated, while β is the minimum score that the minimizing player is

assured to get. When evaluating more and more nodes, we can only get

a better estimate and so α can only increase while β can only decrease.

) complexity if the best nodes

are searched first (O(b3d∕4) for a random ordering of nodes). α is the maximum

score that we (the maximizing player) are assured to get given what we

already evaluated, while β is the minimum score that the minimizing player is

assured to get. When evaluating more and more nodes, we can only get

a better estimate and so α can only increase while β can only decrease.

Algorithm 2: Alpha-beta algorithm

function ALPHABETA(node,depth,α,β,player)

if depth ≤ 0 then

return value(player)

end if

if player == us then

for all possible moves do

α ← max(α,alphabeta(child,depth - 1,α,β,opponent))

if β ≤ α then

break

end if

end for

return α

else

for all possible moves do

β ← min(β,alphabeta(child,depth - 1,α,β,us))

if β ≤ α then

break

end if

end for

return β

end if

end function

If we find a node for which β becomes less than α, it means that this position

results from sub-optimal play. When it is because of an update of β, the sub-optimal

play is on the side of the maximizing player (his α is not high enough to be optimal

and/or the minimizing player has a winning move faster in the current sub-tree) and

this situation is called an α cut-off. On the contrary, when the cut results from an

update of α, it is called a β cut-off and means that the minimizing player would have

to play sub-optimally to get into this sub-tree. When starting the game, α is

initialized to -∞ and β to +∞. A worked example is given on Figure 2.3.

Alpha-beta is going to be helpful to search much deeper than Minimax in the

same allowed time. The best Checkers program (since the 90s), which is also the

project which solved Checkers [Schaeffer et al., 2007b], Chinook, has opening and

end-game (for lest than eight pieces of fewer) books, and for the mid-game (when

there are more possible moves) relies on a deep search algorithm. So, apart for

the beginning and the ending of the game, for which it plays by looking

up a database, it used a search algorithm. As Minimax and Alpha-beta

are depth first search heuristics, all programs which have to answer in a

fixed limit of time use iterative deepening. It consists in fixing limited depth

which will be considered maximal and evaluating this position. As it does not

relies in winning moves at the bottom, because the search space is too big in

branchingdepth, we need moves evaluation heuristics. We then iterate on growing

the maximal depth for which we consider moves, but we are at least sure

to have a move to play in a short time (at least the greedy depth 1 found

move).

2.3.3 Chess, heuristics

With a branching factor* of ≈ 35 and an average game length of 80 moves

[Shannon, 1950], the average game-tree complexity of chess is 3580 ≈ 3.10123.

Shannon [1950] also estimated the number of possible (legal) positions to be of the

order of 1043, which is called the Shannon number. Chess AI needed a little more

than just Alpha-beta to win against top human players (not that Checkers could not

benefit it), particularly on 1996 hardware (first win of a computer against a reigning

world champion, Deep Blue vs. Garry Kasparov). Once an AI has openings and

ending books (databases to look-up for classical moves), how can we search deeper

during the game, or how can we evaluate better a situation? In iterative deepening

Alpha-beta (or other search algorithms like Negascout [Reinefeld, 1983] or

MTD-f[Plaat, 1996]), one needs to know the value of a move at the maximal

depth. If it does not correspond to the end of the game, there is a need for an

evaluation heuristic. Some may be straight forward, like the resulting value

of an exchange in pieces points. But some strategies sacrifice a queen in

favor of a more advantageous tactical position or a checkmate, so evaluation

heuristics need to take tactical positions into account. In Deep Blue, the

evaluation function had 8000 cases, with 4000 positions in the openings

book, all learned from 700,000 grandmaster games [Campbell et al., 2002].

Nowadays, Chess programs are better than Deep Blue and generally also search

less positions. For instance, Pocket Fritz (HIARCS engine) beats current

grandmasters [Wikipedia, Center] while evaluating 20,000 positions per second (740

MIPS on a smartphone) against Deep Blue’s (11.38 GFlops) 200 millions per

second.

2.3.4 Go, Monte-Carlo tree search

With an estimated number of legal 19x19 Go positions of 2.081681994 * 10170

[Tromp and Farnebäck, 2006] (1.196% of possible positions), and an average

branching factor* above Chess for gobans* from 9x9 and above, Go sets

another limit for AI. For 19x19 gobans, the game tree complexity is up to

10360 [Allis, 1994]. The branching factor varies greatly, from ≈ 30 to 300

(361 cases at first), while the mean depth (number of plies in a game) is

between 150 to 200. Approaches other than systematic exploration of the game

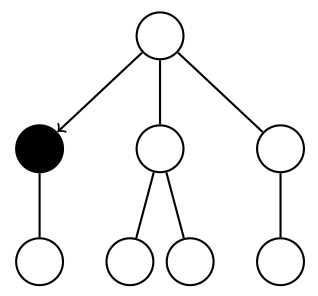

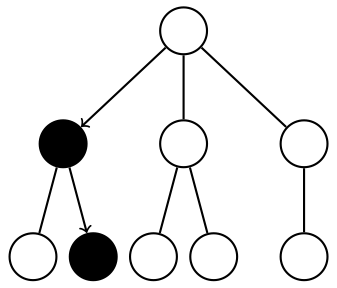

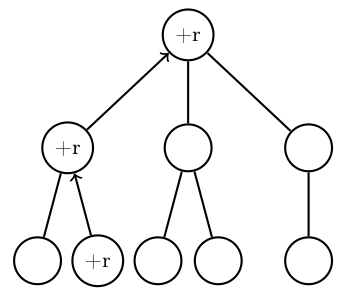

tree are required. One of them is Monte Carlo Tree Search (MCTS*). Its

principle is to randomly sample which nodes to expand and which to exploit in

the search tree, instead of systematically expanding the build tree as in

Minimax. For a given node in the search tree, we note Q(node) the sum of the

simulations rewards on all the runs through node, and N(node) the visits count of

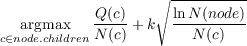

node. Algorithm 3 details the MCTS algorithm and Fig. 2.4 explains the

principle.

Algorithm 3: Monte-Carlo Tree Search algorithm. EXPANDFROM(node) is the

tree (growing) policy function on how to select where to search from situation

node (exploration or exploitation?) and how to expand the game tree (deep-first,

breadth-first, heuristics?) in case of untried actions. EVALUATE(tree) may have

2 behaviors: 1. if tree is complete (terminal), it gives an evaluation according to

games rules, 2. if tree is incomplete, it has to give an estimation, either through

simulation (for instance play at random) or an heuristic. BESTCHILD picks the

action that leads to the better value/reward from node. MERGE(node,tree)

changes the existing tree (with node) to take all the Q(ν)∀ν ∈ tree (new) values

into account. If tree contains new nodes (there were some exploration), they are

added to node at the right positions.

function MCTS(node)

while computational time left do

tree ← EXPANDFROM(node)

tree.values ← EVALUATE(tree)

MERGE(node,tree)

end while

return BESTCHILD(node)

end function

The MoGo team [Gelly and Wang, 2006, Gelly et al., 2006, 2012] introduced

the use of Upper Confidence Bounds for Trees (UCT*) for MCTS* in Go AI. MoGo

became the best 9x9 and 13x13 Go program, and the first to win against a pro on

9x9. UCT specializes MCTS in that it specifies EXPANDFROM (as in Algorithm. 4)

tree policy with a specific exploration-exploitation trade-off. UCB1 [Kocsis and

Szepesvári, 2006] views the tree policy as a multi-armed bandit problem and so

EXPANDFROM(node) UCB1 chooses the arms with the best upper confidence

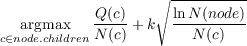

bound:

in

which k fixes the exploration-exploitation trade-off:

in

which k fixes the exploration-exploitation trade-off:  is simply the average reward

when going through c so we have exploitation only for k = 0 and exploration only for

k = ∞.

is simply the average reward

when going through c so we have exploitation only for k = 0 and exploration only for

k = ∞.

Kocsis and Szepesvári [2006] showed that the probability of selecting sub-optimal

actions converges to zero and so that UCT MCTS converges to the minimax tree and

so is optimal. Empirically, they found several convergence rates of UCT to be in

O(bd∕2), as fast as Alpha-beta tree search, and able to deal with larger problems

(with some error). For a broader survey on MCTS methods, see [Browne

et al., 2012].

With Go, we see clearly that humans do not play abstract strategy games using

the same approach. Top Go players can reason about their opponent’s move, but they

seem to be able to do it in a qualitative manner, at another scale. So, while tree

search algorithms help a lot for tactical play in Go, particularly by integrating

openings/ending knowledge, pattern macthing algorithms are not yet at the

strategical level of human players. When a MCTS algorithm learns something, it

stays at the level of possible actions (even considering positional hashing*), while the

human player seems to be able to generalize, and re-use heuristics learned at another

level.

2.4 Games with uncertainty

An exhaustive list of games, or even of games genres, is beyond the scope/range of

this thesis. All uncertainty boils down to incompleteness of information, being it

the physics of the dice being thrown or the inability to measure what is

happening in the opponent’s brain. However, we will speak of 2 types (sources) of

uncertainty: extensional uncertainty, which is due to incompleteness in direct,

measurable information, and intentional uncertainty, which is related to

randomness in the game or in (the opponent’s) decisions. Here are two extreme

illustrations of this: an agent acting without sensing is under full extensional

uncertainty, while an agent whose acts are the results of a perfect random

generator is under full intentional uncertainty. The uncertainty coming from the

opponent’s mind/cognition lies in between, depending on the simplicity to

model the game as an optimization procedure. The harder the game is to

model, the harder it is to model the trains of thoughts our opponents can

follow.

2.4.1 Monopoly

In Monopoly, there is few hidden information (Chance and Community

Chest cards only), but there is randomness in the throwing of

dice ,

and a substantial influence of the player’s knowledge of the game. A very basic

playing strategy would be to just look at the return on investment (ROI) with regard

to prices, rents and frequencies, choosing what to buy based only on the money you

have and the possible actions of buying or not. A less naive way to play should

evaluate the questions of buying with regard to what we already own, what others

own, our cash and advancement in the game. The complete state space is huge

(places for each players × their money × their possessions), but according to Ash

and Bishop [1972], we can model the game for one player (as he has no

influence on the dice rolls and decisions of others) as a Markov process on 120

ordered pairs: 40 board spaces × possible number of doubles rolled so far in

this turn (0, 1, 2). With this model, it is possible to compute more than

simple ROI and derive applicable and interesting strategies. So, even in

Monopoly, which is not lottery playing or simple dice throwing, a simple

probabilistic modeling yields a robust strategy. Additionally, Frayn [2005]

used genetic algorithms to generate the most efficient strategies for portfolio

management.

Monopoly is an example of a game in which we have complete direct information

about the state of the game. The intentional uncertainty due to the roll of the dice

(randomness) can be dealt with thanks to probabilistic modeling (Markov processes

here). The opponent’s actions are relatively easy to model due to the fact that

the goal is to maximize cash and that there are not many different efficient

strategies (not many Nash equilibrium if it were a stricter game) to attain it. In

general, the presence of chance does not invalidate previous (game theoretic /

game trees) approaches but transforms exact computational techniques into

stochastic ones: finite states machines become probabilistic Bayesian networks for

instance.

2.4.2 Battleship

Battleship (also called “naval combat”) is a guessing game generally played with two

10 × 10 grids for each players: one is the player’s ships grid, and one is to

remember/infer the opponent’s ships positions. The goal is to guess where the enemy

ships are and sink them by firing shots (torpedoes). There is incompleteness of

information but no randomness. Incompleteness can be dealt with with probabilistic

reasoning. The classic setup of the game consist in two ships of length 3 and one ship

of each lengths of 2, 4 and 5; in this setup, there are 1,925,751,392 possible

arrangements for the ships. The way to take advantage of all possible information is

to update the probability that there is a ship for all the squares each time we have

additional information. So for the 10 × 10 grid we have a 10 × 10 matrix

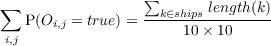

O1:10,1:10 with Oi,j ∈{true,false} being the ith row and jth column random

variable of the case being occupied. With ships being unsunk ships, we always

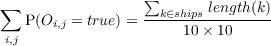

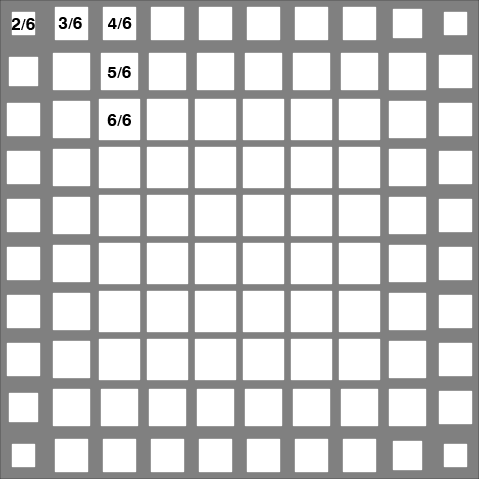

have:

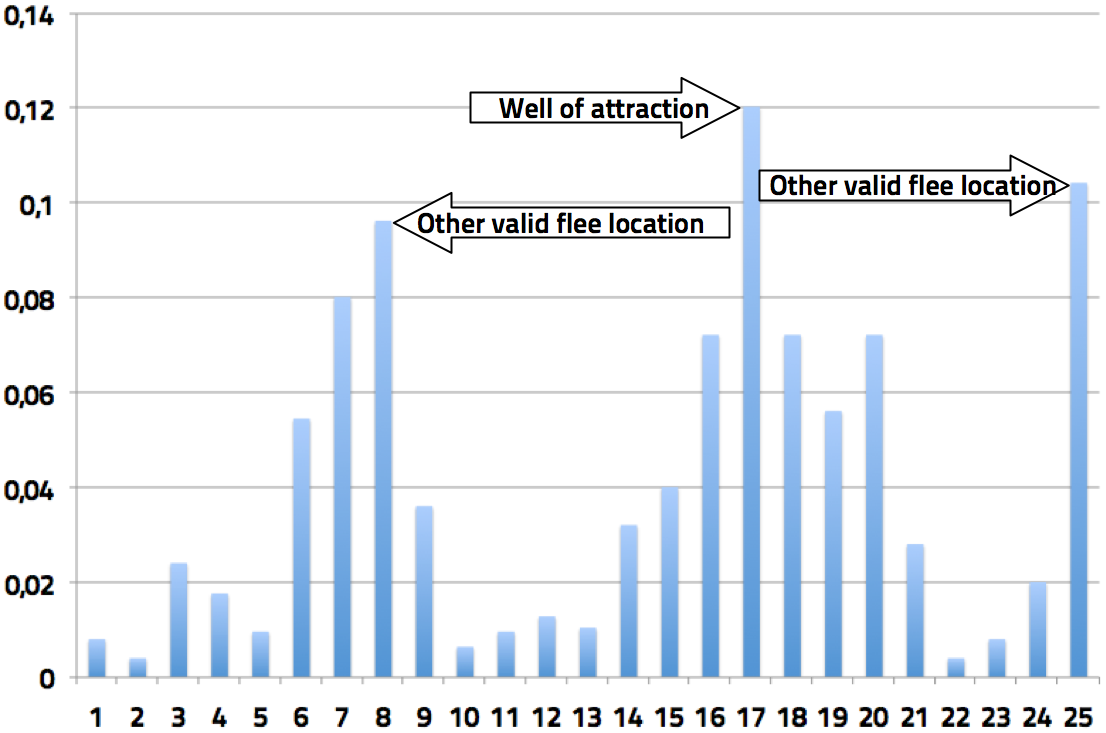

For instance for a ship of size 3 alone at the beginning we can have prior

distributions on O1:10,1:10 by looking at combinations of its placements (see

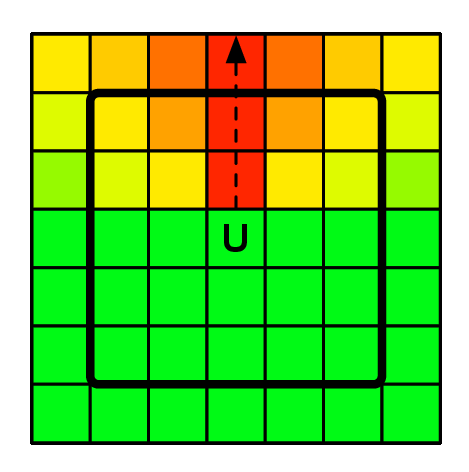

Fig. 2.5). We can also have priors on where the opponent likes to place her

ships. Then, in each round, we will either hit or miss in i,j. When we hit,

we know P(Oi,j = true) = 1.0 and will have to revise the probabilities of

surrounding areas, and everywhere if we learned the size of the ship, with possible

placement of ships. If we did not sunk a ship, the probabilities of uncertain (not

0.0 or 1.0) positions around i,j will be highered according to the sizes of

remaining ships. If we miss, we know P([Oi,j = false]) = 1.0 and can also revise

(lower) the surrounding probabilities, an example of that effect is shown in

Fig. 2.5.

For instance for a ship of size 3 alone at the beginning we can have prior

distributions on O1:10,1:10 by looking at combinations of its placements (see

Fig. 2.5). We can also have priors on where the opponent likes to place her

ships. Then, in each round, we will either hit or miss in i,j. When we hit,

we know P(Oi,j = true) = 1.0 and will have to revise the probabilities of

surrounding areas, and everywhere if we learned the size of the ship, with possible

placement of ships. If we did not sunk a ship, the probabilities of uncertain (not

0.0 or 1.0) positions around i,j will be highered according to the sizes of

remaining ships. If we miss, we know P([Oi,j = false]) = 1.0 and can also revise

(lower) the surrounding probabilities, an example of that effect is shown in

Fig. 2.5.

Battleship is a game with few intensional uncertainty (no randomness),

particularly because the goal quite strongly conditions the action (sink ships as fast

as possible). However, it has a large part of extensional uncertainty (incompleteness

of direct information). This incompleteness of information goes down rather quickly

once we act, particularly if we update a probabilistic model of the map/grid. If we

compare Battleship to a variant in which we could see the adversary board, playing

would be straightforward (just hit ships we know the position on the board), now in

real Battleship we have to model our uncertainty due to the incompleteness of

information, without even beginning to take into account the psychology

of the opponent in placement as a prior. The cost of solving an imperfect

information game increases greatly from its perfect information variant: it seems to

be easier to model stochasticity (or chance, a source of randomness) than

to model a hidden (complex) system for which we only observe (indirect)

effects.

2.4.3 Poker

Poker

is a zero-sum (without the house’s cut), imperfect information and stochastic

betting game. Poker “AI” is as old as game theory [Nash, 1951], but the

research effort for human-level Poker AI started in the end of the 90s. The

interest for Poker AI is such that there are annual AAAI computer Poker

competitions .

Billings et al. [1998] defend Poker as an interesting game for decision-making

research, because the task of building a good/high level Poker AI (player) entails to

take decisions with incomplete information about the state of the game, incomplete

information about the opponents’ intentions, and model their thoughts process to be

able to bluff efficiently. A Bayesian network can combine these uncertainties and

represent the player’s hand, the opponents’ hands and their playing behavior

conditioned upon the hand, as in [Korb et al., 1999]. A simple “risk evaluating” AI

(folding and raising according to the outcomes of its hands) will not prevail

against good human players. Bluffing, as described by Von Neumann and

Morgenstern [1944] “to create uncertainty in the opponent’s mind”, is an element of

Poker which needs its own modeling. Southey et al. [2005] also give a Bayesian

treatment to Poker, separating the uncertainty resulting from the game (draw of

cards) and from the opponents’ strategies, and focusing on bluff. From a

game theoretic point of view, Poker is a Bayesian game*. In a Bayesian

game, the normal form representation requires describing the strategy spaces,

type spaces, payoff and belief functions for each player. It maps to all the

possible game trees along with the agents’ information state representing

the probabilities of individual moves, called the extensive form. Both these

forms scale very poorly (exponentially). Koller and Pfeffer [1997] used the

sequence form transformation, the set of realization weights of the sequences of

moves ,

to search over the space of randomized strategies for Bayesian games automatically.

Unfortunately, strict game theoretic optimal strategies for full-scale Poker are still

not tractable this way, two players Texas Hold’em having a state space ≈ O(1018).

Billings et al. [2003] approximated the game-theoretic optimal strategies

through abstraction and are able to beat strong human players (not world-class

opponents).

Poker is a game with both extensional and intentional uncertainty, from the fact

that the opponents’ hands are hidden, the chance in the draw of the cards, the

opponents’ model about the game state and their model about our mental state(s)

(leading our decision(s)). While the iterated reasoning (“if she does A, I

can do B”) is (theoretically) finite in Chess due to perfect information, it is

not the case in Poker (“I think she thinks I think...”). The combination of

different sources of uncertainty (as in Poker) makes it complex to deal with it

(somehow, the sources of uncertainty must be separated), and we will see

that both these sources of uncertainties arise (at different levels) in video

games.

2.5 FPS

2.5.1 Gameplay and AI

First person shooters gameplay* consist in controlling an agent in first person

view, centered on the weapon, a gun for instance. The firsts FPS popular

enough to bring the genre its name were Wolfeinstein 3D and Doom, by iD

Software. Other classic FPS (series) include Duke Nukem 3D, Quake, Half-Life,

Team Fortress, Counter-Strike, Unreal Tournament, Tribes, Halo, Medal of

Honor, Call of Duty, Battlefield, etc. The distinction between “fast FPS”

(e.g. Quake series, Unreal Tournament series) and others is made on the

speed at which the player moves. In “fast FPS”, the player is always jumping,

running much faster and playing more in 3 dimensions than on discretely

separate 2D ground planes. Game types include (but are not limited to):

- single-player missions, depending of the game design.

- capture-the-flag (CTF), in which a player has to take the flag inside the

enemy camp and bring it back in her own base.

- free-for-all (FFA), in which there are no alliances.

- team deathmatch (TD), in which two (or more) teams fight on score.

- various gather and escort (including hostage or payload modes), in which

one team has to find and escort something/somebody to another location.

- duel/tournament/deathmatch, 1 vs 1 matches (mainly “fast FPS”).

From these various game types, the player has to maximize its damage (or positive

actions) output while staying alive. For that, she will navigate her avatar in an

uncertain environment (partial information and other players intentions) and shoot

(or not) at targets with specific weapons.

Some games allow for instant (or delayed, but asynchronous to other players)

respawn (recreation/rebirth of a player), most likely in the “fast FPS” (Quake-like)

games, while in others, the player has to wait for the end of the round to respawn. In

some games, weapons, ammunitions, health, armor and items can be picked on the

ground (mainly “fast FPS”), in others, they are fixed at the start or can be

bought in game (with points). The map design can make the gameplay vary

a lot, between indoors, outdoors, arena-like or linear maps. According to

maps and gameplay styles, combat may be well-prepared with ambushes,

sniping, indirect (zone damages), or close proximity (even to fist weapons).

Most often, there are strong tactical positions and effective ways to attack

them.

While “skill” (speed of the movements, accuracy of the shots) is easy to emulate

for an AI, team-play is much harder for bots and it is always a key ability. Team-play

is the combination of distributed evaluation of the situation, planning and

distribution of specialized tasks. Very high skill also requires integrating over enemy’s

tactical plans and positions to be able to take indirect shots (grenades for instance)

or better positioning (coming from their back), which is hard for AI too. An example

of that is that very good human players consistently beat the best bots (nearing

100% accuracy) in Quake III (which is an almost pure skill “fast FPS”), because they

take advantage of being seen just when their weapons reload or come from

their back. Finally, bots which equal the humans by a higher accuracy are

less fun to play with: it is a frustrating experience to be shot across the

map, by a bot which was stuck in the door because it was pushed out of its

trail.

2.5.2 State of the art

FPS AI consists in controlling an agent in a complex world: it can have to

walk, run, crouch, jump, swim, interact with the environment and tools, and

sometimes even fly. Additionally, it has to shoot at moving, coordinated and

dangerous, targets. On a higher level, it may have to gather weapons, items or

power-ups (health, armor, etc.), find interesting tactical locations, attack, chase,

retreat...

The Quake III AI is a standard for Quake-like games [van Waveren and

Rothkrantz, 2002]. It consists in a layered architecture (hierarchical) FSM*. At the

sensory-motor level, it has an Area Awareness System (AAS) which detects collisions,

accessibility and computes paths. The level above provides intelligence for chat,

goals (locations to go to), and weapons selection through fuzzy logic. Higher

up, there are the behavior FSM (“seek goals”, chase, retreat, fight, stand...)

and production rules (if-then-else) for squad/team AI and orders. A team

of bots always behaves following the orders of one of the bots. Bots have

different natures and tempers, which can be accessed/felt by human players,

specified by fuzzy relations on “how much the bot wants to do, have, or

use something”. A genetic algorithm was used to optimize the fuzzy logic

parameters for specific purposes (like performance). This bot is fun to play

against and is considered a success, however Quake-like games makes it easy

to have high level bots because very good players have high accuracy (no

fire spreading), so they do not feel cheated if bots have a high accuracy

too. Also, the game is mainly indoors, which facilitates tactics and terrain

reasoning. Finally, cooperative behaviors are not very evolved and consist

in acting together towards a goal, not with specialized behaviors for each

agent.

More recent FPS games have dealt with these limitations by using combinations

of STRIPS planning (F.E.A.R. [Orkin, 2006]), HTN* (Killzone 2 [Champandard

et al., 2009] and ArmA [van der Sterren, 2009]), Behavior trees (Halo 2

[Isla, 2005]). Left4Dead (a cooperative PvE FPS) uses a global supervisor of the

AI to set the pace of the threat to be the most enjoyable for the player

[Booth, 2009].

In research, Laird [2001] focused on learning rules for opponent modeling,

planning and reactive planning (on Quake), so that the robot builds plan by

anticipating the opponent’s actions. Le Hy et al. [2004], Hy [2007] used

robotics inspired Bayesian models to quickly learn the parameters from human

players (on Unreal Tournament). Zanetti and Rhalibi [2004] and Westra and

Dignum [2009] applied evolutionary neural networks to optimize Quake

III bots. Predicting opponents positions is a central task to believability

and has been solved successfully using particle filters [Bererton, 2004] and

other models (like Hidden Semi-Markov Models) [Hladky and Bulitko, 2008].

Multi-objective neuro-evolution [Zanetti and Rhalibi, 2004, Schrum et al., 2011]

combines learning from human traces with evolutionary learning for the

structure of the artificial neural network, and leads to realistic (human-like)

behaviors, in the context of the BotPrize challenge (judged by humans)

[Hingston, 2009].

2.5.3 Challenges

Single-player FPS campaigns immersion could benefit from more realistic bots and

clever squad tactics. Multi-player FPS are competitive games, and a better game AI

should focus on:

- believability requires the AI to take decisions with the same informations

than the human player (fairness) and to be able to deal with unknown

situation.

- surprise and unpredictability is key for both performance and the

long-term interest in the human player in the AI.

- performance, to give a challenge to human players, can be achieved

through cooperative, planned and specialized behaviors.

2.6 (MMO)RPG

2.6.1 Gameplay and AI

Inspired directly by tabletop and live action role-playing games (Dungeon &

Dragons) as new tools for the game masters, it is quite natural for the RPG to have

ended up on computers. The firsts digital RPG were text (Wumpus) or

ASCII-art (Rogue, NetHack) based. The gameplay evolved considerably

with the technique. Nowadays, what we will call a role playing game (RPG)

consist in the incarnation by the human player of an avatar (or a team of

avatars) with a class: warrior, wizard, rogue, priest, etc., having different skills,

spells, items, health points, stamina/energy/mana (magic energy) points.

Generally, the story brings the player to solve puzzles and fight. In a fight,

the player has to take decisions about what to do, but skill plays a lesser

role in performing the action than in a FPS game. In a FPS, she has to

move the character (egocentrically) and aim to shoot; in a RPG, she has

to position itself (often way less precisely and continually) and just decide

which ability to use on which target (or a little more for “action RPG”). The

broad category of RPG include: Fallout, The Elders Scrolls (from Arena

to Skyrim), Secret of Mana, Zelda, Final Fantasy, Diablo, Baldur’s Gate.

A MMORPG (e.g. World of Warcraft, AION or EVE Online) consist in

a role-playing game in a persistent, multi-player world. There usually are

players-run factions fighting each others’ (PvP) and players versus environment

(PvE) situations. PvE* may be a cooperative task in which human players

fight together against different NPC, and in which the cooperation is at the

center of the gameplay. PvP is also a cooperative task, but more policy and

reactions-based than a trained and learned choregraphy as for PvE. We can

distinguish three types (or modality) of NPC which have different game AI needs:

- world/neutral/civilian NPC: gives quests, takes part in the world’s or

game’s story, talks,

- “mob”/hostile NPC that the player will fight,

- “pets”/allied NPC: acts by the players’ sides.

- persistent character AI could maintain the players’ avatars in the world

when they are disconnected.

NPC acting strangely are sometimes worse for the player’s immersion than immobile and

dull ones. However, it is more fun for the player to battle with hostile NPC which are

not too dumb or predictable. Players really expect allied NPC to at least not

hinder them, and it is even better when they adapt to what the player is

doing.

2.6.2 State of the art

Methods used in FPS* are also used in RPG*. The needs are sometimes a little

different for RPG games: for instance RPG need interruptible behaviors, behaviors

that can be stopped and resumed (dialogs, quests, fights...) that is. RPG also require

stronger story-telling capabilities than other gameplay genres, for which they use

(logical) story representations (ontologies) [Kline, 2009, Riedl et al., 2011].

Behavior multi-queues [Cutumisu and Szafron, 2009] resolve the problems of having

resumable, collaborative, real-time and parallel behavior, and tested their approach

on Neverwinter Nights. Basically they use prioritized behavior queues which can be

inserted (for interruption and resumption) in each others. AI directors are control

programs tuning the difficulty and pace of the game session in real-time from

player’s metrics. Kline [2011] used an AI director to adapt the difficulty of

Dark Spore to the performance (interactions and score) of the player in

real-time.

2.6.3 Challenges

There are mainly two axes for RPG games to bring more fun: interest in the game

play(s), and immersion. For both these topics, we think game AI can bring a lot:

- believability of the agents will come from AI approaches than can deal

with new situations, being it because they were not dealt with during

game development (because the “possible situations” space is too big) or

because they were brought by the players’ unforeseeable actions. Scripts

and strict policies approaches will be in difficulty here.

- interest (as opposed to boredom) for the human players in the game style

of the AI will come from approaches which can generate different behaviors

in a given situation. Expectable AI particularly affects replayability

negatively.

- performance relative to the gameplay will come from AI approaches

than can fully deal with cooperative behavior. One solution is to design

mobs to be orders of magnitude stronger (in term of hit points and

damages) than players’ characters, or more numerous. Another, arguably

more entertaining, solution is to bring the mobs behavior to a point where

they are a challenge for the team of human players.

Both believability and performance require to deal with uncertainty of the game

environment. RPG AI problem spaces are not tractable for a frontal (low-level)

search approach nor are there few enough situations to consider to just write a bunch

of script and puppeteer artificial agents at any time.

2.7 RTS Games

As RTS are the central focus on this thesis, we will discuss specific problems and

solution more in depth in their dedicated chapters, simply brushing here the

underlying major research problems. Major RTS include the Command&Conquer,

Warcraft, StarCraft, Age of Empires and Total Annihilation series.

2.7.1 Gameplay and AI

RTS gameplay consist in gathering resources, building up an economic and military

power through growth and technology, to defeat your opponent by destroying his

base, army and economy. It requires dealing with strategy, tactics, and units

management (often called micro-management) in real-time. Strategy consist in

what will be done in the long term as well as predicting what the enemy is

doing. It particularly deals with the economy/army trade-off estimation, army

composition, long-term planning. The three aggregate indicators for strategy are

aggression, production, and technology. The tactical aspect of the gameplay is

dominated by military moves: when, where (with regard to topography and weak

points), how to attack or defend. This implies dealing with extensional (what

the invisible units under “fog of war*” are doing) and intentional (what

will the visible enemy units do) uncertainty. Finally, at the actions/motor

level, micro-management is the art of maximizing the effectiveness of the

units i.e. the damages given/damages received ratio. For instance: retreat

and save a wounded unit so that the enemy units would have to chase it

either boosts your firepower or weakens the opponent’s. Both [Laird and van

Lent, 2001] and Gunn et al. [2009] propose that RTS AI is one of the most

challenging genres, because all levels in the hierarchy of decisions are of

importance.

More will be said about RTS in the dedicated chapter 4.

2.7.2 State of the art & challenges

Buro [2004] called for AI research in RTS games and identified the technical

challenges as:

- adversarial planning under uncertainty, and for that abstractions

have to be found both allowing to deal with partial observations and to

plan in real-time.

- learning and opponent modeling: adaptability plays a key role in the

strength of human players.

- spatial and temporal reasoning: tactics using the terrain features and

good timings are essential for higher level play.

To these challenges, we would like to add the difficulty of inter-connecting all special

cases resolutions of these problems: both for the collaborative (economical and

logistical) management of the resources, and for the sharing of uncertainty

quantization in the decision-making processes. Collaborative management of the

resources require arbitrage between sub-models on resources repartition. By sharing

information (and its uncertainty) between sub-models, decisions can be made

that account better for the whole knowledge of the AI system. This will be

extended further in the next chapters as RTS are the main focus of this

thesis.

2.8 Games characteristics

All the types of video games that we saw before require to deal with imperfect

information and sometimes with randomness, while elaborating a strategy (possibly

from underlying policies). From a game theoretic point of view, these video games are

close to what is called a Bayesian game* [Osborne and Rubinstein, 1994].

However, solving Bayesian games is non-trivial, there are no generic and

efficient approaches, and so it has not been done formally for card games with

more than a few cards. Billings et al. [2003] approximated a game theoretic

solution for Poker through abstraction heuristics, it leads to believe than game

theory can be applied at the higher (strategic) abstracted levels of video

games.

We do not pretend to do a complete taxonomy of video games and AI (e.g. [Gunn

et al., 2009]), but we wish to provide all the major informations to differentiate

game genres (gameplays). To grasp the challenges they pose, we will provide abstract

measures of complexity.

2.8.1 Combinatorics

“How does the state of possible actions grow?” To measure this, we used a measure

from perfect information zero-sum games (as Checkers, Chess and Go): the branching

factor* b and the depth d of a typical game. The complexity of a game (for taking a

decision) is proportional to bd. The average branching factor for a board game is easy

to compute: it is the average number of possible moves for a given player. For Poker,

we set b = 3 for fold, check and raise. d should then be defined over some time, the

average number of events (decisions) per hour in Poker is between 20 to 240. For

video-games, we defined b to be the average number of possible moves at

each decision, so for “continuous” or “real-time” games it is some kind of

function of the useful discretization of the virtual world at hand. d has to

be defined as a frequency at which a player (artificial or not) has to take

decisions to be competitive in the game, so we will give it in d∕time_unit.

For instance, for a car (plane) racing game, b ≈ 50 - 500 because b is a

combination of throttle (ẍ) and direction (θ) sampled values that are relevant

for the game world, with d∕min at least 60: a player needs to correct her

trajectory at least once a second. In RTS games, b ≈ 200 is a lower bound

(in StarCraft we may have between 50 to 400 units to control), and very

good amateurs and professional players perform more than 300 actions per

minute.

The sheer size of b and d in video games make it seem intractable, but

humans are able to play, and to play well. To explain this phenomenon,

we introduce “vertical” and “horizontal” continuities in decision making.

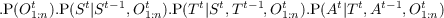

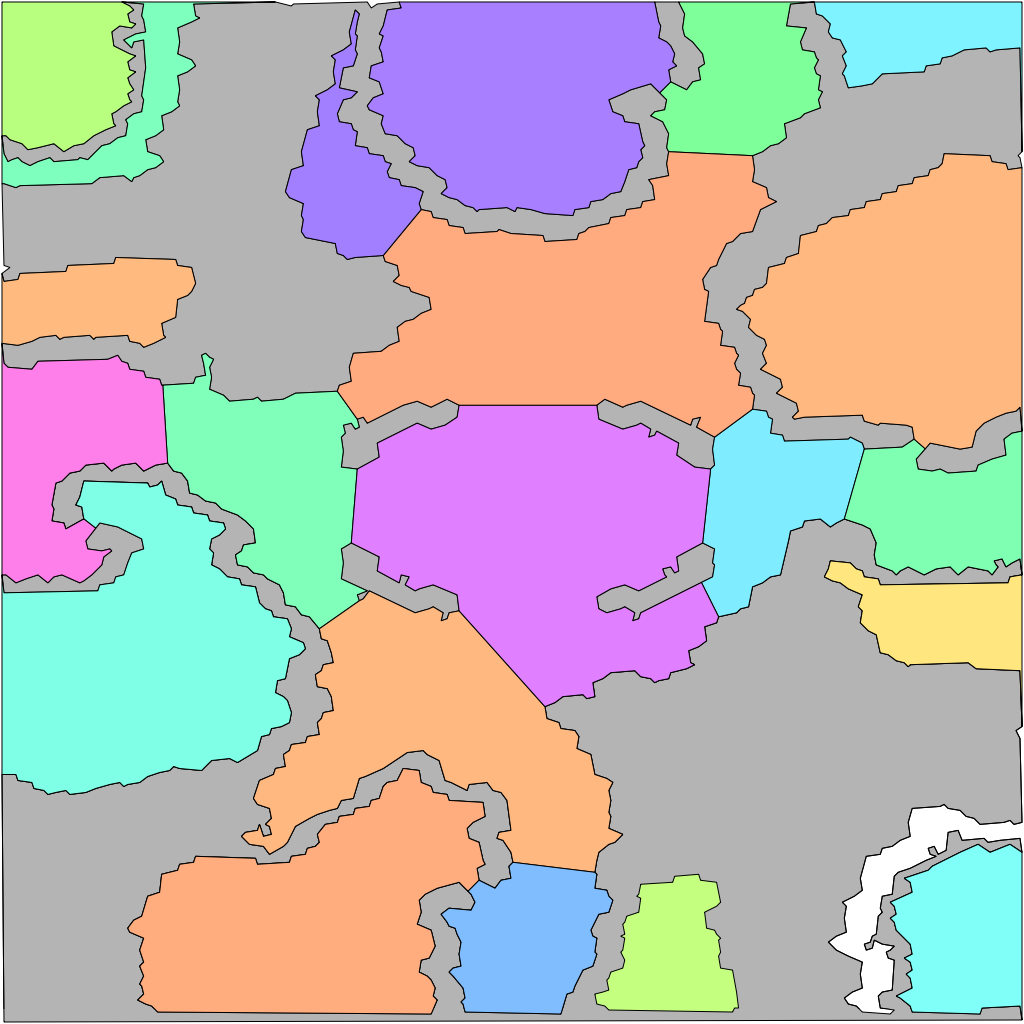

Fig. 2.6 shows how one can view the decision-making process in a video

game: at different time scales, the player has to choose between strategies to

follow, that can be realized with the help of different tactics. Finally, at

the action/output/motor level, these tactics have to be implemented one

way or another. So, matching Fig. 2.6, we could design a Bayesian model:

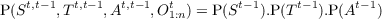

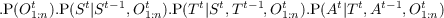

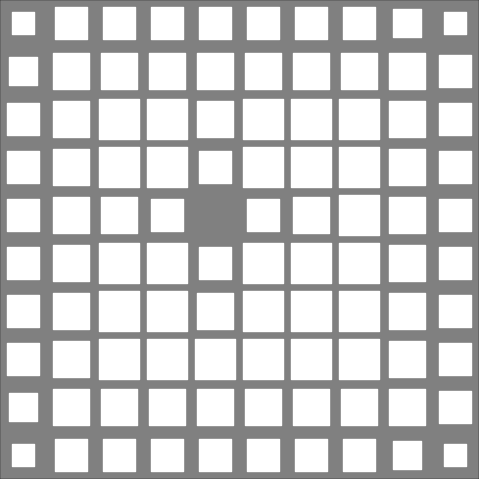

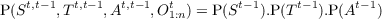

- St,t-1 ∈ Attack,Defend,Collect,Hide, the strategy variable

- Tt,t-1 ∈ Front,Back,Hit - and - run,Infiltrate, the tactics variable

- At,t-1 ∈ low_level_actions, the actions variable

- O1:nt ∈{observations}, the set of observations variables

- P(St|St-1,O1:nt) should be read as “the probability distribution on the

strategies at time t is determined/influenced by the strategy at time t- 1

and the observations at time t”.

- P(Tt|St,Tt-1,O1:nt) should be read as “the probability distribution on

the tactics at time t is determined by the strategy at time t, tactics at

time t - 1 and the observations at time t”.

- P(At|Tt,At-1,O1:nt) should be read as “the probability distribution on

the actions at time t is determined by tactics at time t, the actions at time

t - 1 and the observations at time t”.

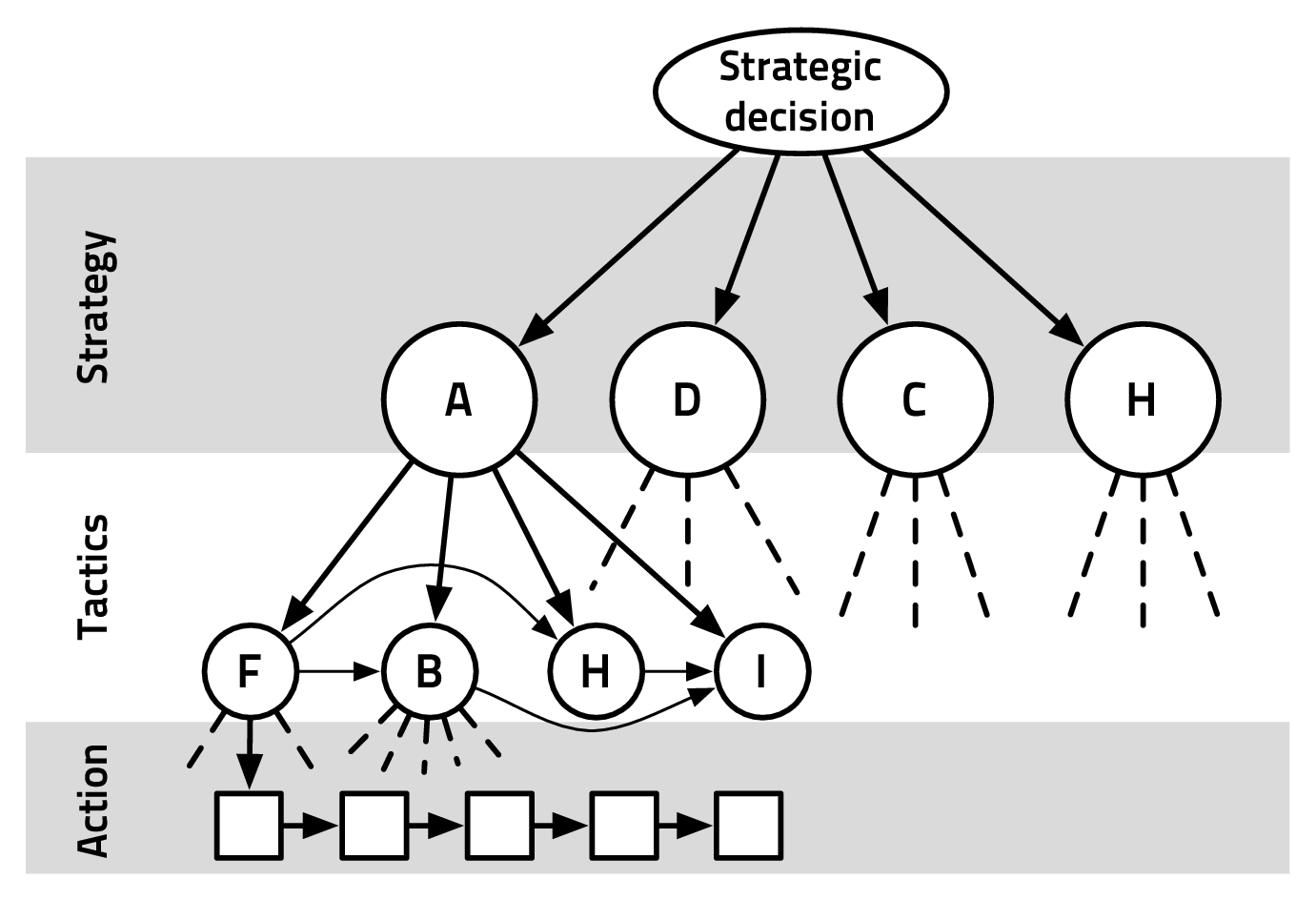

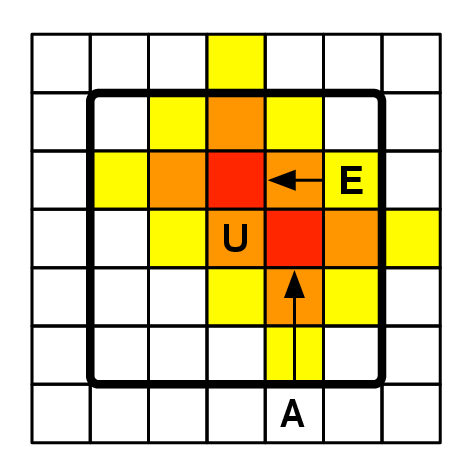

Vertical continuity

In the decision-making process, vertical continuity describes when taking a

higher-level decision implies a strong conditioning on lower-levels decisions. As seen

on Figure 2.7: higher level abstractions have a strong conditioning on lower levels.

For instance, and in non-probabilistic terms, if the choice of a strategy s (in the

domain of S) entails a strong reduction in the size of the domain of T, we consider

that there is a vertical continuity between S and T. There is vertical continuity

between S and T if ∀s ∈{S},{P(Tt|[St = s],Tt-1,O1:nt) > ϵ} is sparse in

{P(Tt|Tt-1,O1:nt) > ϵ}. There can be local vertical continuity, for which the

previous statement holds only for one (or a few) s ∈{S}, which are harder to

exploit. Recognizing vertical continuity allows us to explore the state space

efficiently, filtering out absurd considerations with regard to the higher decision

level(s).

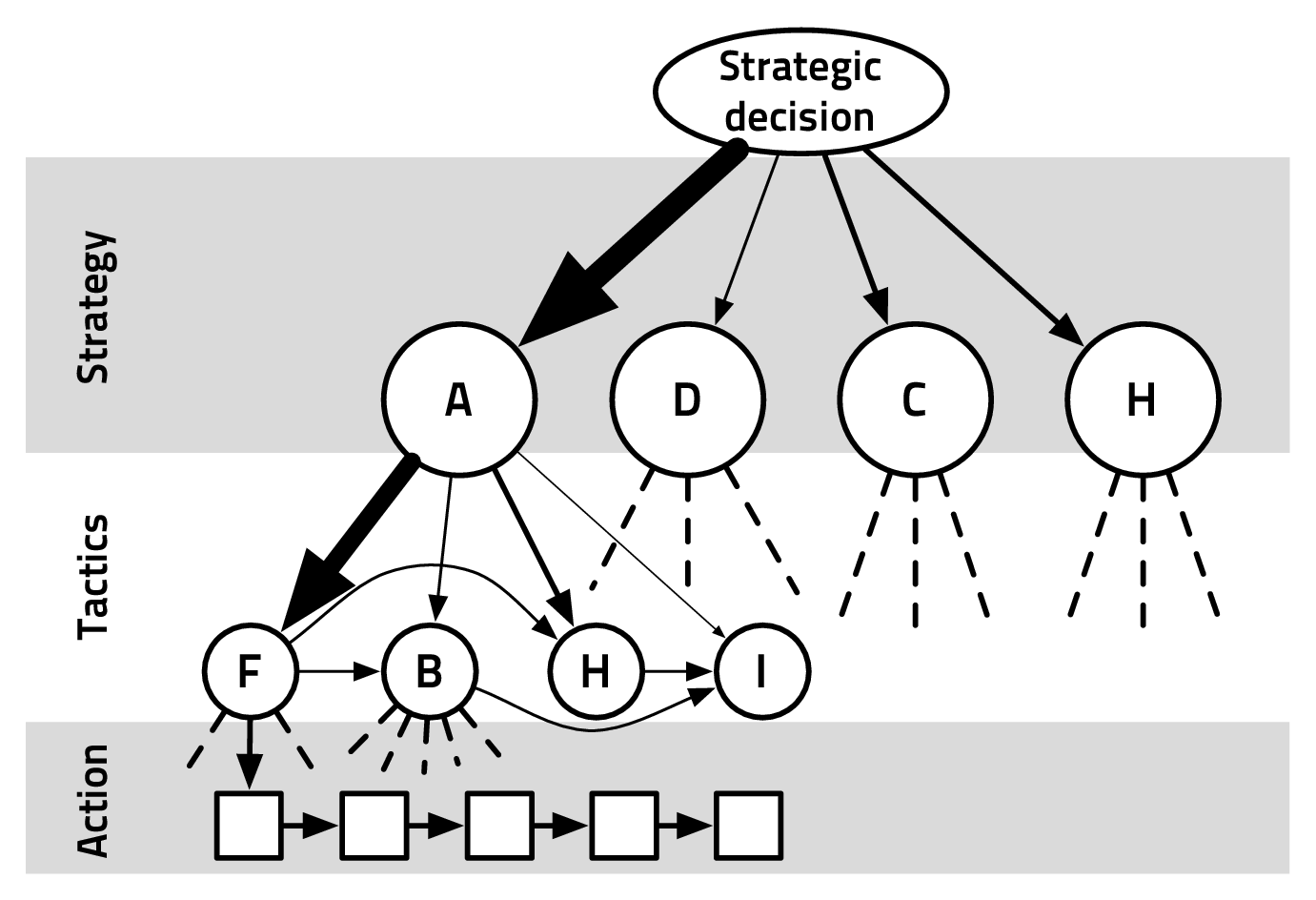

Horizontal continuity

Horizontal continuity also helps out cutting the search in the state space to only

relevant states. At a given abstraction level, it describes when taking a decision

implies a strong conditioning on the next time-step decision (for this given level). As

seen on Figure 2.8: previous decisions on a given level have a strong conditioning on

the next ones. For instance, and in non-probabilistic terms, if the choice of a tactic t

(in the domain of Tt) entails a strong reduction in the size of the domain of Tt+1, we

consider that T has horizontal continuity. There is horizontal continuity

between Tt-1 and Tt if ∀t ∈{T},{P(Tt|St,[Tt-1 = t],O1:nt) > ϵ} is sparse in

{P(Tt|St,O1:nt) > ϵ}. There can be local horizontal continuity, for which the

previous state holds only for one (or a few) t ∈{T}. Recognizing horizontal

continuity boils down to recognizing sequences of (frequent) actions from

decisions/actions dynamics and use that to reduce the search space of subsequent

decisions. Of course, at a sufficiently small time-step, most continuous games have

high horizontal continuity: the size of the time-step is strongly related to the design

of the abstraction levels for vertical continuity.

2.8.2 Randomness

Randomness can be inherent to the gameplay. In board games and table top

role-playing, randomness often comes from throwing dice(s) to decide the outcome of

actions. In decision-making theory, this induced stochasticity is dealt with in the

framework of a Markov decision process (MDP)*. A MDP is a tuple of (S,A,T,R)

with:

- S a finite set of states

- A a finite set of actions

- Ta(s,s′) = P([St+1 = s′]|[St = s],[At = a]) the probability that action a

in state s at time t will lead to state s′ at time t + 1

- Ra(s,s′) the immediate reward for going from state s to state s′ with

action a.

MDP can be solved through dynamic programming or the Bellman value iteration

algorithm [Bellman, 1957]. In video games, the sheer size of S and A make it

intractable to use MDP directly on the whole AI task, but they are used (in research)

either locally or at abstracted levels of decision. Randomness inherent to the process

is one of the sources of intentional uncertainty, and we can consider player’s

intentions in this stochastic framework. Modeling this source of uncertainty is part of

the challenge of writing game AI models.

2.8.3 Partial observations

Partial information is another source of randomness, which is found in shi-fu-mi,

poker, RTS* and FPS* games, to name a few. We will not go down to the fact that

the throwing of the dice seemed random because we only have partial information

about its physics, or of the seed of the deterministic random generator that produced

its outcome. Here, partial observations refer to the part of the game state which is

deliberatively hidden between players (from a gameplay point of view): hidden

hands in poker or hidden units in RTS* games due to the fog of war*. In

decision-making theory, partial observations are dealt with in the framework of a

partially observable Markov decision process (POMDP)* [Sondik, 1978].

A POMDP is a tuple (S,A,O,T,Ω,R) with S,A,T,R as in a MDP and:

- O a finite set of observations

- Ω conditional observations probabilities specifying: P(Ot+1|St+1,At)

In a POMDP, one cannot know exactly which state they are in and thus must reason

with a probability distribution on S. Ω is used to update the distribution

on S (the belief) uppon taking the action a and observing o, we have:

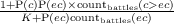

P([St+1 = s′]) ∝ Ω(o|s′,a).∑

s∈STa(s,s′).P([St = s]). In game AI, POMDP are

computationally intractable for most problems, and thus we need to model

more carefully (without full Ω and T) the dynamics and the information

value.

2.8.4 Time constant(s)

For novice to video games, we give some orders of magnitudes of the time constants

involved. Indeed, we present here only real-time video games and time constants are

central to comprehension of the challenges at hand. In all games, the player is taking

actions continuously sampled at the minimum of the human interface device (mouse,

keyboard, pad) refreshment rate and the game engine loop: at least 30Hz.

In most racing games, there is a quite high continuity in the input which

is constrained by the dynamics of movements. In other games, there are

big discontinuities, even if fast FPS* control resembles racing games a lot.

RTS* professional gamers are giving inputs at ≈ 300 actions per minute

(APM*).

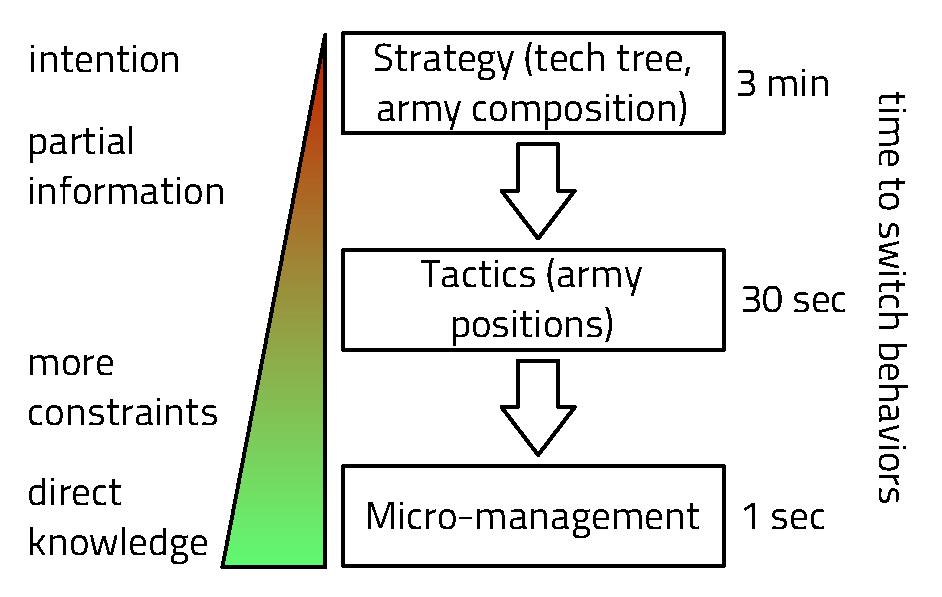

There are also different time constants for a strategic switch to take

effect. In RTS games, it may vary between the build duration of at least one

building and one unit (1-2 minutes) to a lot more (5 minutes). In an RPG or

a team FPS, it may even be longer (up to one full round or one game by

requiring to change the composition of the team and/or the spells) or shorter

by depending on the game mode. For tactical moves, we consider that the

time for a decision to have effects is proportional to the mean time for a

squad to go from the middle of the map (arena) to anywhere else. In RTS

games, this is usually between 20 seconds to 2 minutes. Maps variability

between RPG* titles and FPS* titles is high, but we can give an estimate of

tactical moves to use between 10 seconds (fast FPS) to 5 minutes (some FPS,

RPG).

2.8.5 Recapitulation

We present the main qualitative results for big classes of gameplays (with examples)

in a table page 81.

| quantization in increasing order: no, negligible, few, some, moderate, much

|

|

|

|

|

|

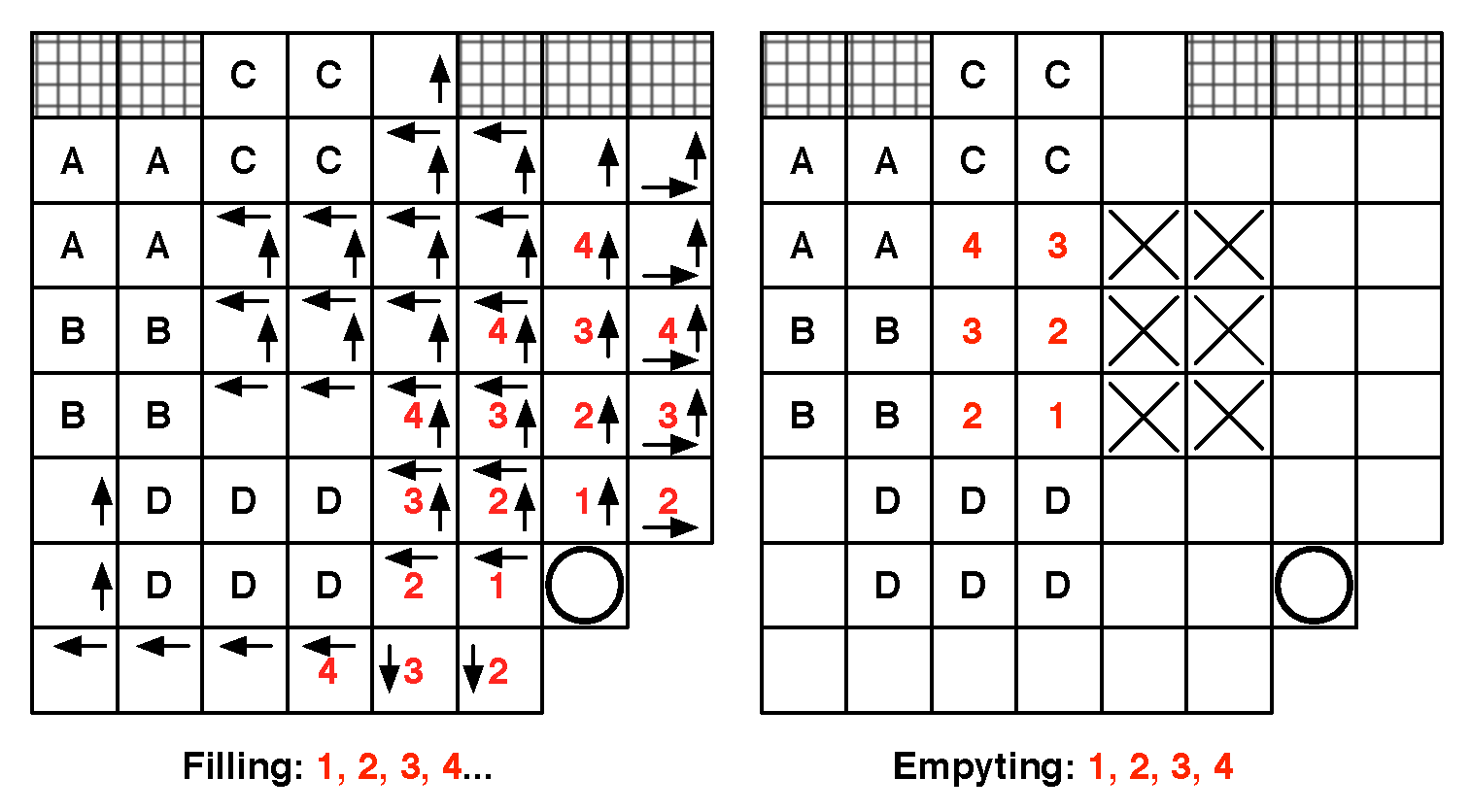

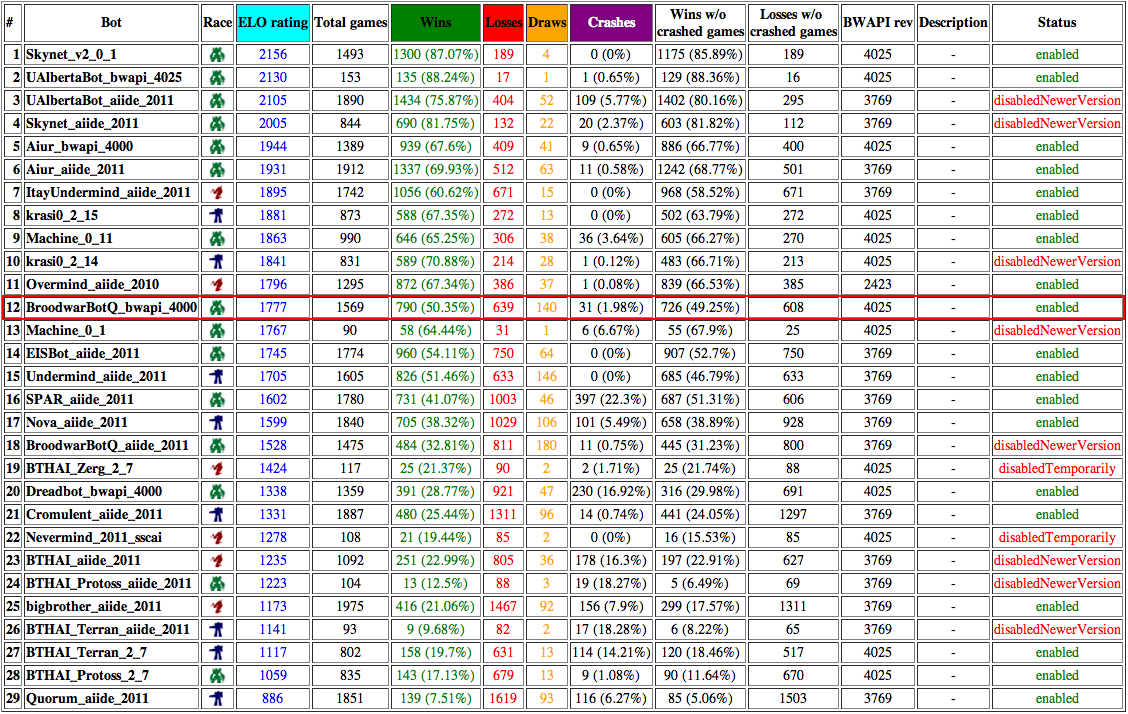

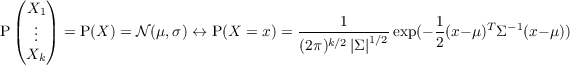

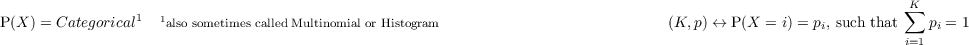

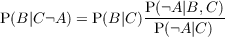

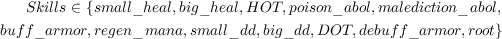

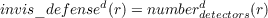

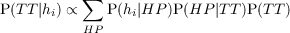

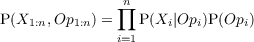

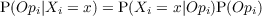

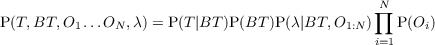

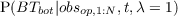

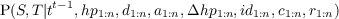

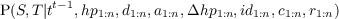

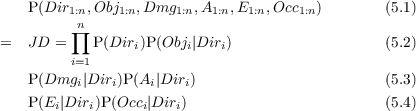

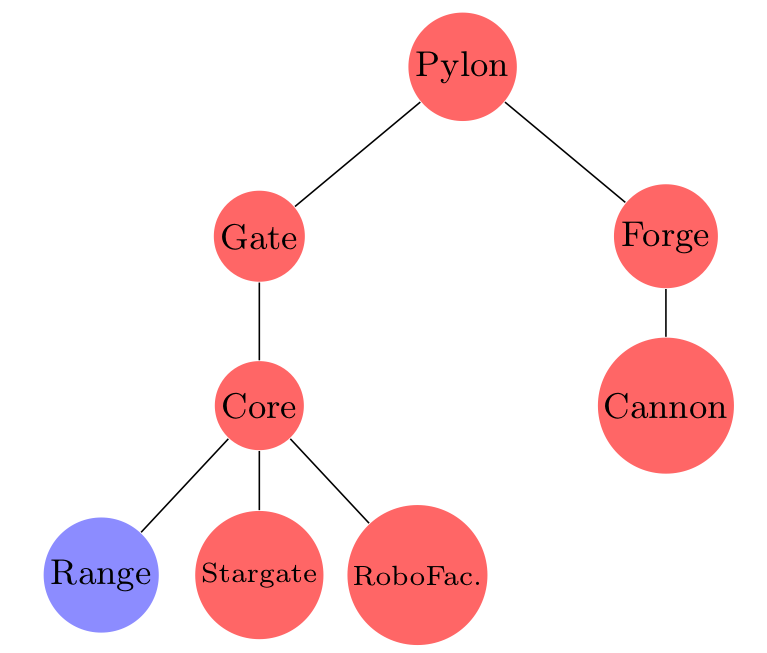

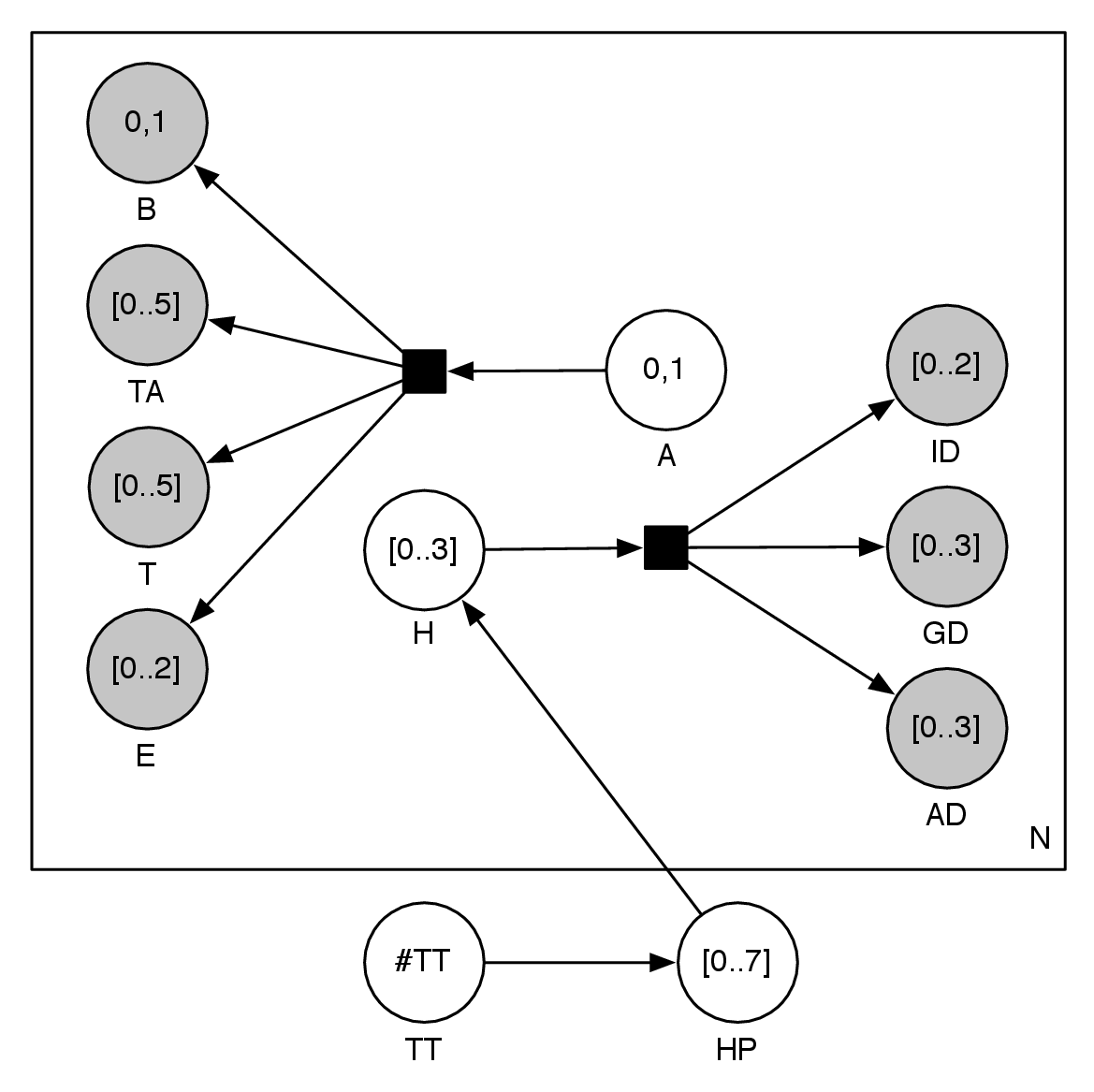

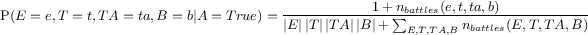

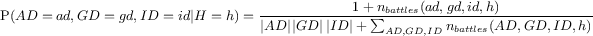

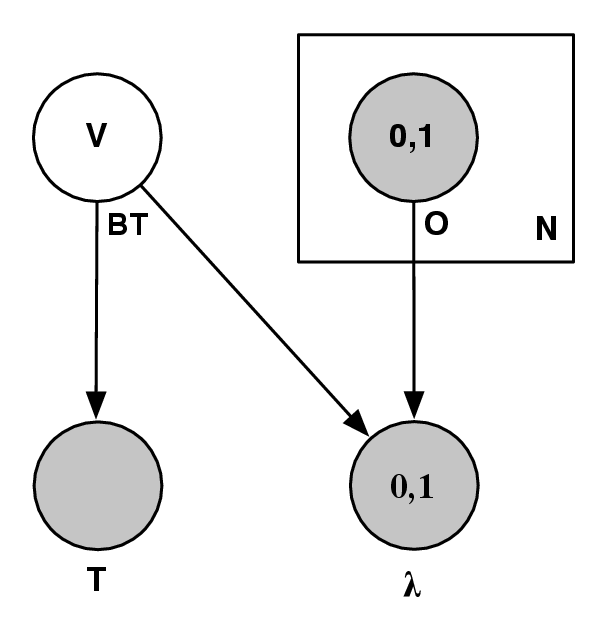

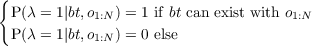

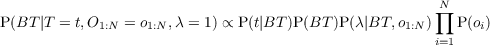

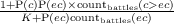

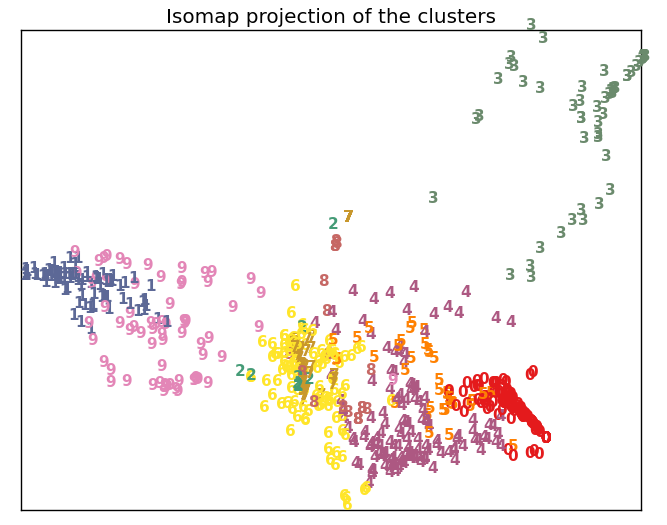

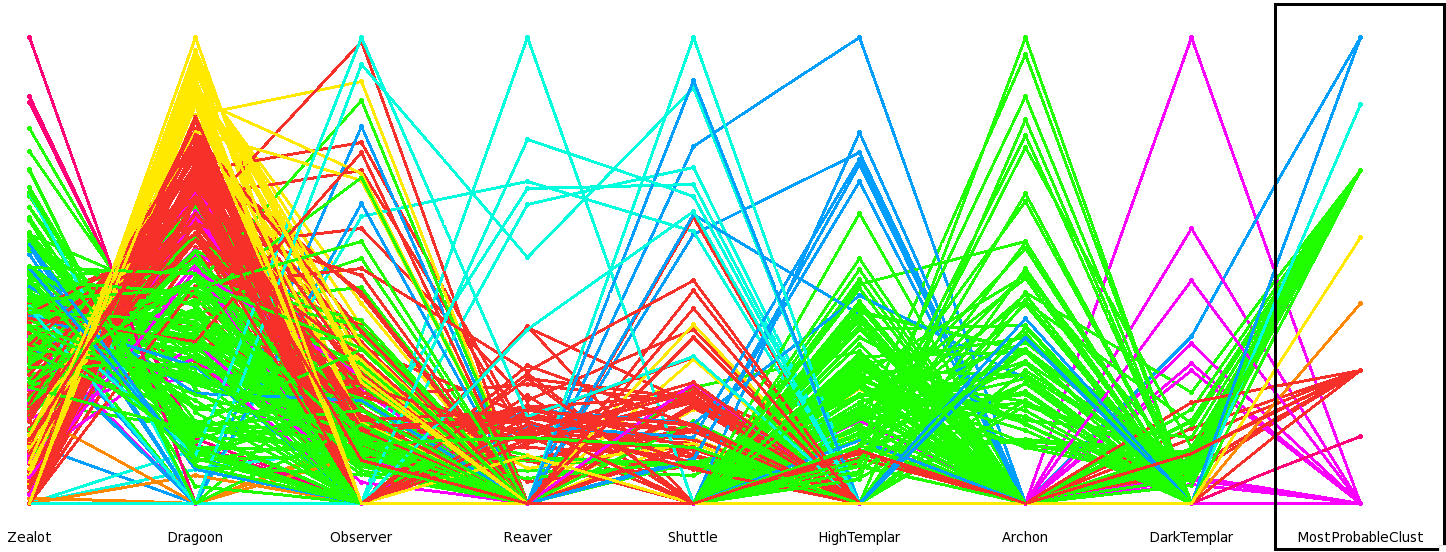

|